FG4 Multibox Manual

Document version: 6.0

Firmware version: 6.0

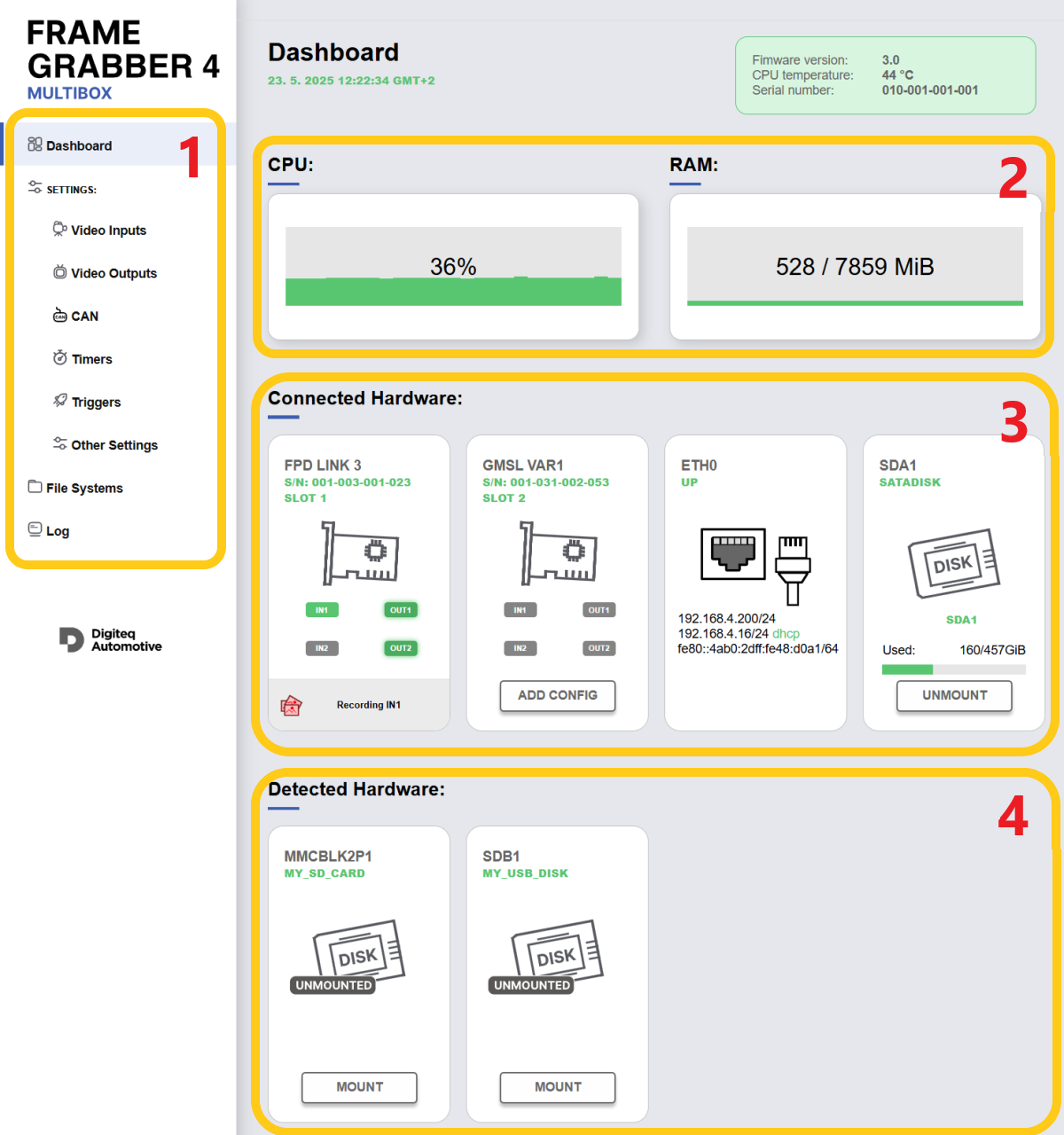

FG4 Multibox (also known as MGB4) is a device whose primary purpose is capturing video streams from, and generating video streams to, specific hardware interfaces. For this purpose the box uses FG4 PCIe cards, each equipped with an appropriate interface module (e.g. FPDL3, GMSL). Both FG4 Multibox and FG4 PCIe cards are products of Digiteq Automotive.

System on Module (SOM) provides the main computing power. Currently Nvidia Jetson TX2 or TX2i is used as the SOM. It contains Dual-Core Nvidia Denver 2 and Quad-Core ARM Cortex-A57 CPUs, a 256-core Nvidia Pascal GPU, 8GB LPDDR4 RAM and 32GB eMMC storage. It also contains many peripherals, but not all of them are used, and some are used only in a specific configuration.

Mainboard interconnects all participating electronic components into one functional unit. Besides connectors it also contains many active elements, such as the power supply, power management IC (PMIC), RTC battery, CAN drivers, etc. The PMIC is especially important, as it controls the onboard power supply, the power on/off sequences and the external triggers. It also provides the ability to power on the FG4 Multibox automatically, either when it gets connected to a power supply or when configured events occur on the external triggers.

PCIe switch board expands the single PCIe 2.0 x4 interface of the Nvidia Jetson TX2/TX2i and allows up to five PCIe cards to be used.

FG4 Multibox is a Linux-based device. The behavior of all subsystems (e.g. system time, video, network, CAN), the way storage devices are connected and the way system logs are handled are all determined by Linux, and this is clearly reflected in the behavior of the API. It is therefore quite common that the API provided by FG4 Multibox noticeably resembles the API provided by the underlying Linux operating system. Likewise, the behavior of internal services (e.g. HTTP server, NTP client, secure shell), the list of supported features (e.g. file systems) and the list of supported external devices (e.g. CAN or network cards) all depend closely on the Linux distribution and kernel used.

Used Linux distribution:

Customized Ubuntu 18.04 (Bionic Beaver)

Used Linux kernel:

GNU/Linux 4.9.201-mgb4 aarch64, customized kernel based on Nvidia Tegra Linux, tag tegra-l4t-32.5

Although many Linux services are running, only a few of them expose an API and form the core FG4 Multibox functionality.

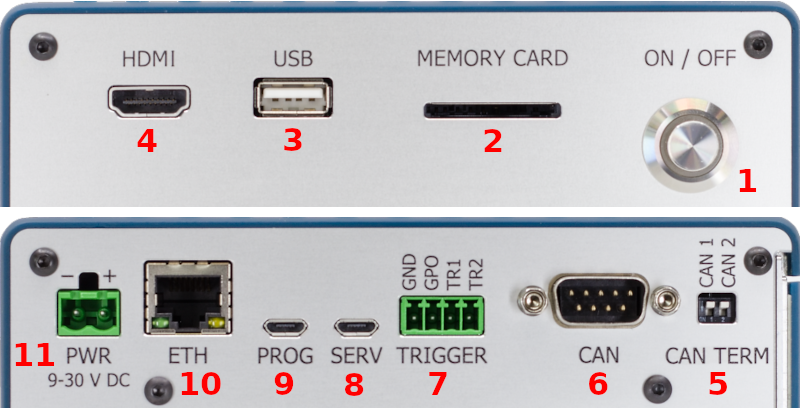

A short press of the power button triggers the FG4 Multibox power-on or power-off sequence. The power-off sequence may also be triggered by API. The power-on sequence may also be triggered automatically by the Mainboard PMIC. Other types of power sequences exist, and all of them can be triggered only by API. The progress of power sequences is indicated by the Power button LED.

Power button LED indicates some significant states of FG4 Multibox.

NOTE:

Current firmware doesn't support CAN wake-up triggers. The blue color only means that the CAN transceivers are powered; the wake-up functionality is not implemented yet.

Full-size SD card interface supporting up to SDR104 card mode (UHS-1). Working with storage devices is described in Storage chapter.

USB 2.0, Type-A, Host mode. Multiple device types may be connected via USB interface. See Storage, Network or CAN chapters to get detailed information about working with these devices.

HDMI 2.0, Type A (standard) or C (mini), depending on the particular FG4 Multibox hardware revision. The interface is intended for connecting an external display on which useful content may be shown. See Screen chapter to get detailed information about the connected display. See GUI chapter to get information about the shown content.

DIP switches that allow internal termination resistors to be connected to embedded CAN buses 1 and 2. When the switch is in position ON (pulled down), an internal 120Ω resistor is connected between the CAN high (CANH) and CAN low (CANL) signals.

Standard 9-pin D-sub (Cannon) male connector that provides access to embedded CAN buses 1 and 2. The physical layer complies with high-speed CAN (HS-CAN) as defined in ISO 11898-2:2016 and SAE J2284-1 to SAE J2284-5. FG4 Multibox contains two embedded CAN devices: the first one (usually named can0 in API) is connected to bus 1, the second one (usually named can1 in API) is connected to bus 2. Working with CAN devices is described in CAN chapter.

| Pin | Signal |
| --- | --- |
| 1 | not connected |
| 2 | CAN low (CAN bus 1) |
| 3 | CAN GND |
| 4 | CAN low (CAN bus 2) |
| 5 | not connected |
| 6 | not connected |
| 7 | CAN high (CAN bus 1) |
| 8 | CAN high (CAN bus 2) |
| 9 | not connected |

External triggers TR1 and TR2 are hardware inputs that can be used to trigger various events within the FG4 Multibox. The inputs are directly connected to the Mainboard PMIC, so their properties are set via the Mainboard PMIC, e.g. connecting pull-up or pull-down resistors or setting the specific triggering edge. They are also part of the Trigger system, so the mapping of events is set via the Trigger system, e.g. capturing a video frame or rendering a specific trigger mark in a video frame.

Connector type: DEGSON 15EDGK-3.5-04P-14

| Parameter | Value |
| --- | --- |
| Low level input voltage | <0.8V |
| High level input voltage | >2.0V |
| Maximum input voltage | ±35.0V |
| Internal pull-up/down resistors | 40-130kΩ |
| Input current (no pull-up/down) | 3-180nA@3.3V |

USB 2.0, Micro-B, Device mode (FTDI, FT232R USB UART, idVendor=0403, idProduct=6001), 115200 8n1. This serial device provides access to the FG4 Multibox U-boot and Linux console.

USB 2.0, Micro-B, Device mode (NVIDIA Corp., APX, idVendor=0955, idProduct=7c18). This device is provided by Nvidia Jetson TX2/TX2i SOM booted in recovery mode. The interface is intended only for Digiteq Automotive factory programming of FG4 Multibox.

Ethernet 10/100/1000 BASE-T, RJ45. Working with network devices is described in Network chapter. This network device is usually named as eth0 in API.

| Parameter | Value | Condition |
| --- | --- | --- |
| Input voltage | 10-30V DC | |
| Idle current | 20mA@12V | Power-off state |
| Estimated max. power | (20 + 15n) W | Heavy computing load |
| Estimated min. power | (13 + 10n) W | No computing load |

where n is the number of inserted FG4 PCIe cards.

E.g.

When only one FG4 PCIe card is inserted, power supply 12V/3A should be enough.

When five FG4 PCIe cards are inserted, power supply 12V/8A should be enough.
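The two examples above follow directly from the estimated power formulas. The following is a minimal sketch of that arithmetic; the function names are illustrative only and are not part of any FG4 Multibox API. The limits (zero to five cards, 10-30V supply) are taken from this manual.

```python
def estimated_power_w(cards):
    """Return (min_w, max_w) estimated power in watts for `cards` inserted FG4 PCIe cards."""
    if not 0 <= cards <= 5:
        raise ValueError("the box accepts at most five PCIe cards")
    # Formulas from the power table: (13 + 10n) W and (20 + 15n) W.
    return 13 + 10 * cards, 20 + 15 * cards


def required_current_a(cards, supply_voltage=12.0):
    """Return the supply current in amperes needed at the estimated maximum power."""
    if not 10.0 <= supply_voltage <= 30.0:
        raise ValueError("input voltage must be within 10-30 V DC")
    _, max_w = estimated_power_w(cards)
    return max_w / supply_voltage


if __name__ == "__main__":
    for n in (1, 5):
        print(n, "card(s):", round(required_current_a(n), 2), "A at 12 V")
```

With one card the estimate is 35W, just under 3A at 12V; with five cards it is 95W, just under 8A at 12V, which matches the recommendations above.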

Connector type: DEGSON 2EDGK-5.0-02P-14

The following chapters describe the non-GUI application interface (API), intended to be used directly from programming languages, e.g. by automation tools or GUI applications. The web application providing the GUI also uses this API as its backend. Please read the Services chapter first, as it describes the fundamental behavior of the API and defines the terms used by all following API-related chapters.

Many chapters contain examples, that show how to communicate with API from OS shell by using some commonly available tools (curl, nc, wscat, ...). These examples are written for Linux Bash, so they may be copy-pasted into this shell directly. When using other shells (e.g. Windows cmd), some modifications may be required.

Most of the FG4 Multibox functionality is executed by its internal software services, each with its own special purpose. Some of these services expose an API, so they can be controlled by the user. The API is exposed in different ways, e.g. each video stream is available on its dedicated TCP server and file sharing is done through the Samba server, but most of the functionality is available through the HTTP server.

The most important services are the Application service, the Logger service and the Filesystem service. They all expose their API and form the core functionality of FG4 Multibox.

HTTP server listens on TCP port 80.

Samba server listens on TCP ports 139 and 445.

NTP server listens (if enabled) on UDP port 123.

Dedicated TCP servers (e.g. video stream servers) have no fixed listening TCP ports, as they are configurable by user.

No username or password is required.

This is the most important service as this one is responsible for control over the biggest part of the whole system, e.g. setting video, network and CAN interfaces, mounting storage drives etc.

Most of the functionality is available through HTTP server at URL path /api/app/*. The service also provides dedicated TCP servers (e.g. video stream servers). Next description refers only to communication through HTTP server.

The service functions may take or return some data. In this case, data are always passed in message body as a single JSON object. For information about JSON object see RFC8259.

The service uses the same status codes for all its functions: 200 (ok) or 202 (accepted) for success, anything else for some kind of error. The service itself uses only 400 (bad request), 422 (unprocessable entity) and 500 (internal server error), but other error status codes may be returned if the transaction fails somewhere on its route, e.g. 502 (bad gateway). In case of error, the service may return a detailed error description in the output data; its JSON schema is available here. Status codes 202 (accepted) and 422 (unprocessable entity) are used only by actions.

The service can be viewed as a hierarchy of functional objects, each responsible for a specific part of the system. Each object has its own invokable actions, readable and writable configuration properties and readable status properties. Mainly due to these characteristics the API is divided into separate parts.

This part of the API allows an object to perform an immediate activity, which is done by invoking its action. Actions are available at URL path /api/app/actions/*. The list of actions currently available (at runtime) can be obtained by the GET verb at URL path /api/app/actions. The list of all existing actions, including JSON schemas of input and output data, is available here. There are two types of actions, synchronous and asynchronous.

A synchronous action finishes immediately. It is invoked by the POST verb on the required action name and the result is returned in the response. Status code 200 (ok) means that the action finished with success (output data are present in the response), status code 422 (unprocessable entity) means that the action finished with error, and any other status code means another kind of error (the action failed in some unexpected way or was not even started).

An asynchronous action takes some time to finish, so the result is not available immediately; instead it must be polled later. The action is invoked by the POST verb on the required action name. Status code 202 (accepted) means that the action was started (but has not finished), any other status code means some kind of error. The result is obtained by the GET verb on the same action name. Status code 202 (accepted) means that the action has not finished yet, status code 200 (ok) means that the action finished with success (output data are present in the response), status code 422 (unprocessable entity) means that the action finished with error, and any other status code means another kind of error.
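The status code rules for synchronous and asynchronous actions can be handled by one small client routine. The following is a minimal sketch using only the Python standard library; the names run_action, http_transport and ActionError, as well as the polling interval and timeout defaults, are assumptions made for illustration and are not defined by the FG4 Multibox API.

```python
import json
import time
import urllib.error
import urllib.request


class ActionError(Exception):
    """Raised when an action ends with a status code other than 200."""

    def __init__(self, status, detail):
        super().__init__("action failed with HTTP status %d" % status)
        self.status = status
        self.detail = detail


def http_transport(base_url):
    """Return a callable (method, path, data) -> (status, parsed JSON or None)."""

    def transport(method, path, data=None):
        body = None if data is None else json.dumps(data).encode("utf-8")
        headers = {"Content-Type": "application/json"} if body is not None else {}
        request = urllib.request.Request(
            base_url + path, data=body, headers=headers, method=method)
        try:
            with urllib.request.urlopen(request) as response:
                status, raw = response.status, response.read()
        except urllib.error.HTTPError as error:
            # urllib raises for 4xx/5xx; keep the status code and the body.
            status, raw = error.code, error.read()
        try:
            return status, json.loads(raw) if raw else None
        except ValueError:
            # Body is not JSON (e.g. an error page produced on the route).
            return status, None

    return transport


def run_action(transport, name, data=None, poll_interval=0.5, timeout=30.0,
               sleep=time.sleep):
    """Invoke an action by POST and, if it answers 202, poll it by GET until done."""
    path = "/api/app/actions/" + name
    status, result = transport("POST", path, data)
    deadline = time.monotonic() + timeout
    while status == 202:  # asynchronous action started or still running
        if time.monotonic() >= deadline:
            raise TimeoutError("action %s did not finish in time" % name)
        sleep(poll_interval)
        status, result = transport("GET", path, None)
    if status == 200:
        return result
    raise ActionError(status, result)  # 422 or any other error status
```

A call such as `run_action(http_transport("http://192.168.1.200"), "time/system_time/get")` would then return the output data of the action, assuming the box is reachable at that address. Passing the transport as a parameter keeps the polling logic independent of the HTTP library in use.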

List available actions

curl -v -X GET 'http://192.168.1.200/api/app/actions'

Get system time

curl -v -X POST 'http://192.168.1.200/api/app/actions/time/system_time/get'

Echo some JSON data

curl -v -X POST -H 'Content-Type: application/json' -d '"hello"' 'http://192.168.1.200/api/app/actions/echo'

This part of the API allows an object to be set into a required state, which is done by setting its configuration properties. Configuration is available at URL path /api/app/config and the corresponding JSON schema is available here. It is possible to address only a part of the configuration by specifying the appropriate JSON pointer in the URL path (/api/app/config<json_pointer>, e.g. /api/app/config/point/to/something). For information about JSON pointer see RFC6901. Several HTTP verbs can be used on the configuration; they are similar to the well-known CRUD operations:

GET - reads existing configuration. Optional JSON pointer in URL path must point to existing object. Output data in message body. No input data.

PUT - updates (by replacing) existing configuration. Optional JSON pointer in URL path must point to existing object. Input data in message body. No output data.

PATCH - updates (by patching) existing configuration. Optional JSON pointer in URL path must point to existing object. Input data (JSON patch) in message body. No output data. For information about JSON patch see RFC6902.

POST - creates new configuration. Required JSON pointer in URL path must meet some criteria to work properly. Consider a pointer /x/y/z. In this case the object pointed to by /x/y must exist and /x/y/z will be created (if it does not exist yet) or replaced (if it already exists). Input data in message body. No output data.

DELETE - deletes existing configuration. Required JSON pointer in URL path must meet some criteria to work properly. Consider a pointer /x/y/z. In this case the object pointed to by /x/y must exist and /x/y/z will be deleted (if it exists) or nothing will be done (if it does not exist). No input data. No output data.

When any method able to change the existing configuration fails, then the configuration remains in its previous state, no partial changes are made.
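Since every verb above addresses the configuration through a JSON pointer appended to /api/app/config, a client needs a reliable way to build such URLs. The following is a minimal sketch; the helper names and the default address are illustrative, and percent-encoding of the pointer is an assumption based on general URL rules rather than on a statement in this manual. The escaping of ~ and / inside a token follows RFC6901.

```python
from urllib.parse import quote

BASE_URL = "http://192.168.1.200"  # address used by the examples in this manual


def json_pointer(tokens):
    """Build an RFC6901 JSON pointer from reference tokens ('~' -> '~0', '/' -> '~1')."""
    return "".join(
        "/" + str(token).replace("~", "~0").replace("/", "~1") for token in tokens
    )


def config_url(tokens=(), base_url=BASE_URL):
    """Return the configuration URL for the object addressed by `tokens`."""
    # Percent-encode characters that are not allowed in a URL path.
    return base_url + "/api/app/config" + quote(json_pointer(tokens), safe="/~")


if __name__ == "__main__":
    print(config_url())
    print(config_url(["time", "ntp", "enabled"]))
```

An empty token list addresses the whole configuration, which corresponds to using the verbs without the optional JSON pointer.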

The provided configuration must always be valid against the currently supported version of schema. But there is one exception, that is called configuration import. It is allowed to provide configuration of lower version than currently supported. In this case the configuration is imported to the current version. Note that provided configuration of lower version still must be valid against its schema (of matching lower version).

It was mentioned, that provided configuration must always be valid against its schema. This is the first and fundamental check, that is performed with the received configuration. But there may be additional checks, especially in situations, that cannot be easily detected by schema. Currently there exists only one additional check, which doesn't allow to assign the same TCP port to multiple TCP servers.

Get configuration of the whole system

curl -v -X GET 'http://192.168.1.200/api/app/config'

Check if NTP client is enabled

curl -v -X GET 'http://192.168.1.200/api/app/config/time/ntp/enabled'

Enable NTP client

curl -v -X PUT -H 'Content-Type: application/json' -d 'true' 'http://192.168.1.200/api/app/config/time/ntp/enabled'

Enable NTP client and trigger file writer

curl -v -X PATCH -H 'Content-Type: application/json' -d '{"time":{"ntp":{"enabled":true}},"trigger":{"file_writer":{"enabled":true}}}' 'http://192.168.1.200/api/app/config'

This part of the API allows the real state of an object to be observed, which is done by getting its status properties. Status is available at URL path /api/app/status and the corresponding JSON schema is available here. It is possible to address only a part of the status by specifying the appropriate JSON pointer in the URL path (/api/app/status<json_pointer>, e.g. /api/app/status/point/to/something). Getting status is done by the GET verb.

Internally the status properties are updated with a period of about 1 second, so there is no benefit in getting the status more often.

Get status of the whole system

curl -v -X GET 'http://192.168.1.200/api/app/status'

Check if NTP client is really running

curl -v -X GET 'http://192.168.1.200/api/app/status/time/ntp/running'

Get CPU info

curl -v -X GET 'http://192.168.1.200/api/app/status/cpu'

This part of the API provides all JSON schemas used by the service. Schemas are available at URL path /api/app/schemas. It is possible to address only a part of the schemas by specifying the appropriate JSON pointer in the URL path (/api/app/schemas<json_pointer>, e.g. /api/app/schemas/point/to/something). Getting schemas is done by the GET verb. There are four important parts.

/api/app/schemas/error - JSON schema of error (see here)

/api/app/schemas/actions - JSON schemas of actions data (see here)

/api/app/schemas/config - JSON schema of configuration (see here)

/api/app/schemas/status - JSON schema of status (see here)

Get all JSON schemas

curl -v -X GET 'http://192.168.1.200/api/app/schemas'

Get JSON schema of error

curl -v -X GET 'http://192.168.1.200/api/app/schemas/error'

Get JSON schema of configuration

curl -v -X GET 'http://192.168.1.200/api/app/schemas/config'

This service provides access to the system log. The log may be useful for getting a more detailed view of the whole system and for debugging.

The service is available through HTTP server at URL path /api/log/*.

The service uses the same status codes for all its functions: 200 (ok) for success, anything else for some kind of error. The service itself uses only 400 (bad request) and 500 (internal server error), but other error status codes may be returned if the transaction fails somewhere on its route, e.g. 502 (bad gateway).

The service uses the same websocket return codes for all its websocket functions, 1000 for success, whatever else for some kind of error. Reason string may be filled with detailed information.

The service uses the same form of passing parameters for all its functions. It uses the query string in pretty standard form, e.g. /api/log/file?what=app&count=10.

Log file can be downloaded by GET verb at URL path /api/log/file. Parameters may be specified to control the content of the log file.

what - identifies the log source. Possible values are all (all sources), kernel (kernel source) and app (application service source). Default value is all.

count - determines the number of required historical records to be sent. If equal to -1, then all records are sent. Default value is -1.

since, until - sends only records on or newer than the specified date, or on or older than the specified date, respectively. Date specifications should be of the format YYYY-MM-DD HH:MM:SS. If the time part is omitted, 00:00:00 is assumed. If only the seconds component is omitted, :00 is assumed. If the date component is omitted, the current day is assumed. Alternatively the strings yesterday, today, tomorrow are understood, which refer to 00:00:00 of the day before the current day, the current day, or the day after the current day, respectively. now refers to the current time. Finally, relative times may be specified, prefixed with - or +, referring to times before or after the current time, respectively.
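The parameters above are passed in the query string, and a date such as YYYY-MM-DD HH:MM:SS contains characters that need escaping in a URL. The following is a minimal sketch of building such a URL; the function name log_file_url and its defaults are illustrative only, and encoding the space as %20 is an assumption about what the server accepts.

```python
from datetime import datetime
from urllib.parse import quote, urlencode


def log_file_url(what="all", count=-1, since=None, until=None,
                 base_url="http://192.168.1.200"):
    """Build the /api/log/file URL; parameters left at their defaults are omitted."""
    if what not in ("all", "kernel", "app"):
        raise ValueError("what must be one of: all, kernel, app")
    params = {}
    if what != "all":
        params["what"] = what
    if count != -1:
        params["count"] = count
    for key, value in (("since", since), ("until", until)):
        if value is None:
            continue
        if isinstance(value, datetime):
            # Format expected by the service: YYYY-MM-DD HH:MM:SS
            value = value.strftime("%Y-%m-%d %H:%M:%S")
        params[key] = value  # strings such as "today" or "now" pass through
    query = urlencode(params, quote_via=quote)
    return base_url + "/api/log/file" + ("?" + query if query else "")


if __name__ == "__main__":
    print(log_file_url(what="app", count=10))
    print(log_file_url(since=datetime(2024, 1, 2, 3, 4, 5)))
```

The date used in the example is arbitrary.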

Get all records of the whole system

curl -v -X GET 'http://192.168.1.200/api/log/file'

Get today's records of the whole system

curl -v -X GET 'http://192.168.1.200/api/log/file?since=today'

Get last 10 records of application service

curl -v -X GET 'http://192.168.1.200/api/log/file?what=app&count=10'

Live log can be received by connecting to websocket at URL path /api/log/feed. Parameters may be specified to control the content of the log data.

what - identifies the log source. Possible values are all (all sources), kernel (kernel source) and app (application service source). Default value is all.

count - determines the number of required historical records to be sent. If equal to -1, then all records are sent. Default value is 10.

follow - determines the state of the log feed after the required count of historical records has been sent. If equal to 0, the feed closes immediately after sending them. If equal to 1, the feed remains open to provide future records. Default value is 1.

Connect to feed of the whole system (send 10 historical records before waiting for new records)

wscat --connect 'http://192.168.1.200/api/log/feed'

Connect to feed of the whole system (send no historical records before waiting for new records)

wscat --connect 'http://192.168.1.200/api/log/feed?count=0'

The whole log can be cleared by the POST verb at URL path /api/log/clear.

Clear log

curl -v -X POST 'http://192.168.1.200/api/log/clear'

This service provides access to the available file systems. These are either mounted devices (e.g. internal hard disk drive, USB drive, SD card) or other specific places (e.g. the directory for uploading new firmware).

The service is available through the HTTP server at URL path /api/fs/*. In this case WebDAV is used to access the file system. When the GET verb is used on an existing directory, a list of files (in JSON format) is returned. Both read and write operations may be possible.

The service is also available through HTTP server at URL path /www/fs/*. In this case the file system is presented as ordinary static web page, so only GET verb can be used. Only read operations are possible.

The service is also available through Samba server. Both read and write operations may be possible.

Currently mounted devices are available at URL paths /api/fs/mounts/ and /www/fs/mounts/ in case of using HTTP server, or at URL path /mounts/ in case of using Samba server. The top level content of these URL paths represents the storage mount points. See Storage chapter for detailed information about storage devices and their mount points.

There exists a special file system place, that is intended only for control of firmware update process. This place is available at URL paths /api/fs/fw/ and /www/fs/fw/ in case of using HTTP server, or at URL path /fw/ in case of using Samba server. See Firmware chapter for detailed information about firmware update.

Get list of mount points (via HTTP, WebDAV). Both variants are possible.

curl -X PROPFIND 'http://192.168.1.200/api/fs/mounts/'

curl -X GET 'http://192.168.1.200/api/fs/mounts/'

Get content of firmware upload directory (via HTTP, WebDAV). Both variants are possible.

curl -X PROPFIND 'http://192.168.1.200/api/fs/fw/'

curl -X GET 'http://192.168.1.200/api/fs/fw/'

Get content of satadisk mount point directory (via HTTP, WebDAV). Both variants are possible.

curl -X PROPFIND 'http://192.168.1.200/api/fs/mounts/satadisk/'

curl -X GET 'http://192.168.1.200/api/fs/mounts/satadisk/'

Upload video.ts file to satadisk mount point directory (via HTTP, WebDAV)

curl -T video.ts 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

Download video.ts file from satadisk mount point directory (via HTTP, WebDAV)

curl -o file.ts 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

Delete video.ts file from satadisk mount point directory (via HTTP, WebDAV)

curl -X DELETE 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

Move/Rename video.ts file to video/video.ts file within the mount point satadisk (via HTTP, WebDAV). Directory video must already exist. Both variants are possible.

curl -X MOVE -H 'Destination:http://192.168.1.200/api/fs/mounts/satadisk/video/video.ts' 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

curl -X MOVE -H 'Destination:/api/fs/mounts/satadisk/video/video.ts' 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

Copy video.ts file to video/video.ts file within the mount point satadisk (via HTTP, WebDAV). Directory video must already exist. Both variants are possible.

curl -X COPY -H 'Destination:http://192.168.1.200/api/fs/mounts/satadisk/video/video.ts' 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

curl -X COPY -H 'Destination:/api/fs/mounts/satadisk/video/video.ts' 'http://192.168.1.200/api/fs/mounts/satadisk/video.ts'

Create video directory in satadisk mount point directory (via HTTP, WebDAV)

curl -X MKCOL 'http://192.168.1.200/api/fs/mounts/satadisk/video/'

Delete video directory from satadisk mount point directory (via HTTP, WebDAV)

curl -X DELETE 'http://192.168.1.200/api/fs/mounts/satadisk/video/'

Move/Rename videos directory to foo/videos directory within the mount point satadisk (via HTTP, WebDAV). Directory foo must already exist. Both variants are possible.

curl -X MOVE -H 'Destination:http://192.168.1.200/api/fs/mounts/satadisk/foo/videos/' 'http://192.168.1.200/api/fs/mounts/satadisk/videos/'

curl -X MOVE -H 'Destination:/api/fs/mounts/satadisk/foo/videos/' 'http://192.168.1.200/api/fs/mounts/satadisk/videos/'

Copy videos directory to foo/videos directory within the mount point satadisk (via HTTP, WebDAV). Directory foo must already exist. Both variants are possible.

curl -X COPY -H 'Destination:http://192.168.1.200/api/fs/mounts/satadisk/foo/videos/' 'http://192.168.1.200/api/fs/mounts/satadisk/videos/'

curl -X COPY -H 'Destination:/api/fs/mounts/satadisk/foo/videos/' 'http://192.168.1.200/api/fs/mounts/satadisk/videos/'

FG4 Multibox power-on and power-off sequences are triggered by a short press of the power button. There are other power operations as well: power-cycle, reboot and reload. Power-cycle can be viewed as a sequence of power-off and power-on operations; during this process the power supply is cut off from most of the internal circuits of the box. Reboot, on the other hand, is just a hot restart, without cutting the power supply. Reload is the least invasive operation, as it only restarts the application service; it is intended only for debugging purposes. The progress of power sequences is indicated by the Power button LED.

Power-off, power-cycle, reboot and reload operations can be triggered by the remote API served by the application service, available at URL path /api/app/actions/power/*. Just call the appropriate nonparametric synchronous action:

/api/app/actions/power/poweroff

/api/app/actions/power/powercycle

/api/app/actions/power/reboot

/api/app/actions/power/reload

Power-off

curl -v -X POST 'http://192.168.1.200/api/app/actions/power/poweroff'

Reboot

curl -v -X POST 'http://192.168.1.200/api/app/actions/power/reboot'

There are two main types of FG4 Multibox firmware packages. The first one is the factory package, which is intended to be installed only at Digiteq Automotive. This package contains all possible firmware components, e.g. boot configuration tables, bootloaders, rescue and application file systems, PMIC and FG4 card firmwares, etc. The second one is the runtime package, which is intended to be installed by ordinary users. This package contains a subset of the factory package. Only the runtime firmware update is described in this manual.

First, the firmware package mgb4-install.tgz must be uploaded to FG4 Multibox. This can be done by remote API served by filesystem service, either by using HTTP (WebDAV) server available at URL path /api/fs/fw/ or by using Samba server at URL path /fw/. In fact, both URL paths reference the same directory on the internal eMMC storage. So just upload the firmware package to this directory.

Second, to execute the update process appropriately, some control flags must be given. These control flags are represented by empty files located at the same place where the firmware package is uploaded. To start the update process on the next boot, create an empty file named mgb4-flash. To keep the previous configuration, create an empty file named mgb4-keep.

Finally, reboot FG4 Multibox. On the next boot, if the control flag mgb4-flash is found, the box enters rescue mode (indicated by a pink power button LED), and if the firmware package is found, the update process is started. When the process is finished (it may take several minutes), the box reboots into the new system. During this boot further reboots may occur, as a firmware update of the mainboard PMIC and FG4 cards (indicated by a yellow power button LED) may be required. When the power button LED is green, the box is ready.

Runtime firmware update may also be accomplished by using an SD card. Just copy the firmware package mgb4-install.tgz to the first partition (fat, ext2/3/4) and create the control flag mgb4-flash. The control flag mgb4-keep is ignored; keeping the previous configuration doesn't work in this case. Then put the card into the box and power it on. From this point the firmware update continues similarly to the update by API. However, in this case the box shuts down when the process finishes.

Get content of firmware upload directory

curl 'http://192.168.1.200/api/fs/fw/'

Firmware update (upload firmware package, upload control flags and reboot)

curl -T 'mgb4-install.tgz' 'http://192.168.1.200/api/fs/fw/'

curl -T 'mgb4-keep' 'http://192.168.1.200/api/fs/fw/'

curl -T 'mgb4-flash' 'http://192.168.1.200/api/fs/fw/'

curl -v -X POST 'http://192.168.1.200/api/app/actions/power/reboot'

On each boot, all plugged FG4 cards are checked for valid firmware, and each FG4 card containing invalid firmware is updated automatically. The process of checking and possible updating is indicated by a yellow power button LED. FG4 card firmware is considered valid if it corresponds to the plugged module/interface (FPDL3, GMSL, ...) and if its version matches the one required by the box. Each FG4 Multibox contains its own list of FG4 card firmwares that are marked as compatible and that are used for the automatic FG4 card firmware update.

For special use cases, the automatic firmware update may be disabled or custom firmware files may be provided. To disable the automatic firmware update, create an empty directory mgb4-fg4-firmware at the same place where the FG4 Multibox firmware is uploaded, i.e. at URL path /api/fs/fw/ when using the HTTP (WebDAV) server or at URL path /fw/ when using the Samba server. To provide custom firmware files, just upload them into the mgb4-fg4-firmware directory. When multiple files of different versions are found, the highest version is always selected. Note that overriding the normal behavior may lead to incompatible FG4 Multibox and FG4 card firmwares, so this feature is not intended to be used by ordinary users.

A power cycle must be performed to finish the firmware update; this is also done automatically. If something goes wrong during the firmware update, the box retries it several times. If the update still fails, the FG4 Multibox ends up with a red power button LED; in this case the automatic firmware update must be disabled or the card must be removed. See FG4 PCIe cards for detailed information about FG4 card parameters.

The configuration of FG4 Multibox is a complex topic that can be described from many different perspectives. The first one involves the common features of the Configuration API of the Application service. The second one involves particular thematic components (video, network, storage, time, etc.) and is spread across multiple chapters. Other perspectives, which involve other specific features, are described in this chapter.

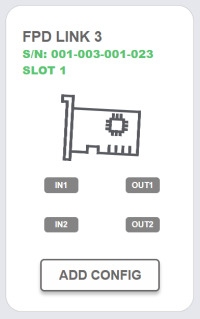

FG4 Multibox never adds or removes configurations for newly connected or disconnected physical devices automatically. It is always up to the user to check the status, to see all present devices and to decide what to add to or remove from the configuration. To make handling newly connected devices easier, there is a way to add configurations for not yet configured devices: just call the nonparametric synchronous action /api/app/actions/config/update. The next GET of /api/app/config returns the updated configuration. Configuration update involves devices at JSON pointers /network/devices, /can/devices, /video/captures and /video/outputs.
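Before calling the update action it can be useful to see which devices are present in the status but missing from the configuration. The following is a minimal sketch of such a comparison on already downloaded JSON documents. It assumes that the objects at the listed JSON pointers are keyed by device name; this layout is an assumption and should be verified against the schemas at /api/app/schemas. The function names are illustrative only.

```python
DEVICE_POINTERS = (
    "/network/devices",
    "/can/devices",
    "/video/captures",
    "/video/outputs",
)


def resolve(document, pointer):
    """Return the dict found at `pointer`, or an empty dict if it is missing."""
    node = document
    for token in pointer.strip("/").split("/"):
        if not isinstance(node, dict) or token not in node:
            return {}
        node = node[token]
    return node if isinstance(node, dict) else {}


def unconfigured_devices(status, config):
    """Map each pointer to the device names present in status but not in config."""
    missing = {}
    for pointer in DEVICE_POINTERS:
        names = sorted(set(resolve(status, pointer)) - set(resolve(config, pointer)))
        if names:
            missing[pointer] = names
    return missing


if __name__ == "__main__":
    # Hypothetical documents, shaped as assumed above.
    status = {"network": {"devices": {"eth0": {}, "eth1": {}}}}
    config = {"network": {"devices": {"eth0": {}}}}
    print(unconfigured_devices(status, config))
```

In practice the two documents would come from GET requests to /api/app/status and /api/app/config.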

Sometimes it may be desired to return the configuration to its factory state (see here). To do it via API just call nonparametric synchronous action /api/app/actions/config/reset. Next getting of /api/app/config returns the factory configuration. To do it via SD card just create empty file mgb4-reset-config on the first partition, then insert the card to the box and reboot. Next getting of /api/app/config returns the factory configuration. Besides that, a specific custom configuration may be imported via SD card during boot. Just create empty file mgb4-reset-config on the first partition (as by factory reset) and also create file mgb4-config.json containing the custom configuration.

Basically there are two types of devices, system-managed and user-managed.

System-managed device is a device (usually physical), whose life cycle is controlled by operating system. Its name is assigned by system and cannot be changed in any way. No special consideration about its form (naming scheme) should be done, it is just a key identifying the device. Never ever parse the name to get any sort of information about the device. Existing system-managed device is always shown in status, regardless of being in configuration. System-managed devices are available at JSON pointers /network/devices, /can/devices, /video/captures, /fg4/devices, /video/outputs, /storage/disks and /storage/disks/<name>/partitions.

User-managed device is a device (usually virtual), whose life cycle is controlled by the user. Its name is assigned by the user and the form of the name is also up to the user; usually it only must be a string. Usually the user can create as many user-managed devices as needed. User-managed device is shown in status only when it exists in configuration. User-managed devices are available at JSON pointers /can/captures, /timer/devices and /storage/mounts.

Update configuration (add not yet configured devices)

curl -v -X POST 'http://192.168.1.200/api/app/actions/config/update'

Reset configuration (to factory state)

curl -v -X POST 'http://192.168.1.200/api/app/actions/config/reset'

The whole system log may be accessed via Logger service. Although the system log has many sources, the most important one is the Application service. This chapter describes how to set the logger of this service. The logger configuration is located at JSON pointer /logger.

Snippet of logger configuration (at JSON pointer /logger)

{

"filter": {

"severity": "error",

"components" : ["mgb4", "netctl"],

"threads" : ["main", "netctl"]

}

}

Item severity is required and is also known as log level. Possible severities (sorted from lowest to highest) are trace, debug, info, warning, error and fatal. Only messages with severity equal to or higher than the configured severity are logged. Be very careful with trace severity as it may generate a really huge amount of messages, e.g. each streamed video frame buffer generates multiple messages. For most debug cases, debug or info severity should be enough.

Item components is optional and contains the names of components, that should be logged. When omitted, then all components are logged. Item threads is optional and contains the names of threads, that should be logged. When omitted, then all threads are logged. Both component and thread names are very internal and volatile attributes, not to be listed in this manual.

Item filter is optional and when omitted, then severity is defaulted to error and all components and threads are logged. Each message from application service has this form: thread |component|severity|message
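The filter rules and the message form described above can be mimicked on the client side, e.g. when post-processing a downloaded log. The following is only a minimal sketch: the helper names are hypothetical, the sample line is made up, and the exact whitespace of real messages is an assumption (fields are therefore stripped).

```python
# Severities from lowest to highest, as listed in the text above.
SEVERITIES = ["trace", "debug", "info", "warning", "error", "fatal"]


def parse_message(line):
    """Split 'thread |component|severity|message' into its four fields."""
    # maxsplit=3 keeps any further '|' characters inside the message itself.
    thread, component, severity, message = line.split("|", 3)
    return {
        "thread": thread.strip(),
        "component": component.strip(),
        "severity": severity.strip(),
        "message": message,
    }


def passes_filter(entry, flt):
    """Return True if the entry would pass the given filter object."""
    # When severity is not given, "error" is assumed (the documented default).
    threshold = SEVERITIES.index(flt.get("severity", "error"))
    if SEVERITIES.index(entry["severity"]) < threshold:
        return False
    # Omitted components/threads mean "log all of them".
    if "components" in flt and entry["component"] not in flt["components"]:
        return False
    if "threads" in flt and entry["thread"] not in flt["threads"]:
        return False
    return True


# Hypothetical log line, for illustration only.
entry = parse_message("main |mgb4|warning|something happened | really")
print(entry)
print(passes_filter(entry, {"severity": "info"}))
print(passes_filter(entry, {"severity": "error", "components": ["mgb4"]}))
```

The actual filtering is done by the Application service itself; this sketch only reproduces the documented rules for offline use.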

Get logger configuration

curl -v -X GET 'http://192.168.1.200/api/app/config/logger'

Set logger configuration

curl -v -X POST -H 'Content-Type: application/json' -d '{"filter":{"severity":"info"}}' 'http://192.168.1.200/api/app/config/logger'

In general there exist multiple sources of time in FG4 Multibox, but only two of them are really important. The first one is system time, that provides information about current date and time. The second one is a special monotonic time, that is primarily used for timestamping of events. Time configuration and status are located at JSON pointer /time.

Snippet of time configuration (at JSON pointer /time)

{

"ntp": {

"enabled": true,

"servers": [

"0.cz.pool.ntp.org",

"1.cz.pool.ntp.org",

"2.cz.pool.ntp.org",

"3.cz.pool.ntp.org"

]

},

"timezone": "Europe/Prague"

}

Snippet of time status (at JSON pointer /time)

{

"ntp": {

"root_delay": 11200778,

"root_dispersion": 36780,

"running": true,

"source": {

"name": "82.202.70.139",

"stratum": 1

},

"system_time_error": 6670096,

"system_time_offset": -1032927,

"system_time_synchronized": true

},

"system_time": "2023-12-07T13:26:08+01:00",

"timestamp": {

"ts_monotonic": 477042312369,

"ts_system": 1727334221461629400

}

}

System time (aka real-time clock or RTC) provides information about current date and time. Basically it can be controlled in two ways, either it can be directly set to specific value or it is controlled by NTP.

To set the specific time value just call parametric synchronous action /api/app/actions/time/system_time/set with parameter containing the time value. To get the current time value just call nonparametric synchronous action /api/app/actions/time/system_time/get, the time value is then contained in response. In both cases the time value must comply with full date and time according to ISO 8601, e.g. "2023-12-07T13:26:08+01:00". Setting the time by mentioned action has no effect when NTP is enabled.
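As an illustration of the required time format, the following sketch builds the JSON body for the system_time/set action from a timezone-aware datetime. The helper name is hypothetical, and dropping fractional seconds is an assumption (the manual only shows whole seconds).

```python
import json
from datetime import datetime, timedelta, timezone


def time_set_body(moment):
    """Return the JSON body (a quoted ISO 8601 string) for system_time/set."""
    if moment.tzinfo is None:
        raise ValueError("a timezone-aware datetime is required")
    # Assumption: whole seconds only, as in the examples of this manual.
    return json.dumps(moment.replace(microsecond=0).isoformat())


cet = timezone(timedelta(hours=1))
body = time_set_body(datetime(2023, 12, 7, 15, 8, 32, tzinfo=cet))
print(body)

# The value returned by system_time/get can be parsed the same way back.
parsed = datetime.fromisoformat(json.loads(body))
print(parsed.utcoffset())
```

The resulting string is what would be passed to curl via the -d option.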

To enable synchronization over NTP, just fill the servers with desired NTP servers and set enabled to true. The NTP status properties are:

running - If true, then NTP is running.

system_time_synchronized - If true, then time is being synchronized.

system_time_error - Time error (in nanoseconds). Valid only if NTP is synchronized. Computed by formula: abs(system_time_offset) + root_dispersion + (root_delay / 2).

system_time_offset - Time offset (in nanoseconds). Valid only if NTP is synchronized.

root_delay - Root delay (in nanoseconds). Valid only if NTP is synchronized.

root_dispersion - Root dispersion (in nanoseconds). Valid only if NTP is synchronized.

source - Time source currently used for synchronization.

source/name - Name (IP address).

source/stratum - Stratum.

As for NTP status, only running and system_time_synchronized properties are required, the other ones are present only if NTP is running.
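The formula for system_time_error can be checked against the values from the time status snippet. This is a small sketch only; the function name is hypothetical and the input is the ntp object copied from the status snippet above.

```python
def system_time_error(ntp):
    """abs(system_time_offset) + root_dispersion + (root_delay / 2), in nanoseconds."""
    return (
        abs(ntp["system_time_offset"])
        + ntp["root_dispersion"]
        + ntp["root_delay"] / 2
    )


# Values taken from the time status snippet.
ntp_status = {
    "root_delay": 11200778,
    "root_dispersion": 36780,
    "system_time_error": 6670096,
    "system_time_offset": -1032927,
}

computed = system_time_error(ntp_status)
print(computed, computed == ntp_status["system_time_error"])
```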

Whenever the NTP starts running, it sends a burst of 4 - 8 requests to the configured servers. Then they are polled every 64 - 1024 seconds, as NTP requires. As soon as any server matches the required criteria, it is selected as the source (property source) and the system time starts being synchronized (property system_time_synchronized becomes true). When the source suddenly becomes unavailable, it does not automatically mean that the time immediately stops being synchronized. The algorithm is still in progress: it continues to estimate the right time and keeps trying to reach the server until it gives up, which may take some time. To restart the NTP algorithm, the whole NTP must be restarted (by setting property enabled to false and then to true again). Usually the NTP corrects the offset by slowing down or speeding up the time (so-called slewing or monotonic advancing), without any steps. A step correction may be done only after the NTP starts running, when the offset is greater than 1 second and no more than 3 corrections have happened.

Whenever the NTP is running, it also acts as an NTP server listening on UDP port 123. Even when the system time is not being synchronized, the NTP server may still be used as a source of time. In this case the NTP server pretends to be synchronized and announces its stratum as 10. This may be useful when machines must be synchronized, but the true world real-time doesn't matter (e.g. working on a local network without access to the internet). To intentionally disable the synchronization of local system time, while the NTP server functionality is preserved, just empty the servers property (enabled property must still be set to true).

It is quite important to have well-adjusted system time. If not, then interaction with FG4 Multibox may be very confusing at some points. Especially in timestamped data streams (e.g. CAN messages, video frames or triggers) if system time is selected to represent their timestamps. Also Logger service may be affected as it uses system time to timestamp the messages.

Whenever the system time is selected for representing timestamps and raw integer format (nanoseconds, microseconds, etc.) is used, it always counts from 1970-01-01 00:00:00 UTC, without considering leap seconds. This kind of representation is also known as Unix time.

Monotonic time is a special type of time, implemented by monotonically increasing counter, that starts its counting at some point during FG4 Multibox boot sequence. Due to its monotonic nature it is very suitable for timestamping events. Whenever the monotonic time is selected for representing timestamps, only raw integer format (nanoseconds, microseconds, etc.) is used. There is no global configuration or status.

Many events on FG4 Multibox are timestamped, e.g. CAN messages, video frames, triggers, etc. Although the timestamps are usually expressed in micro or nanoseconds, the actual precision is much lesser, typically in ones or even tens of milliseconds. It depends on the actual moment the timestamp is assigned, it also depends on the whole system load.

Most events are natively timestamped using the monotonic time base. But whatever time base is used, before the events are presented to the user, their timestamps may usually be converted to another time base. Currently only two time bases are available, monotonic and system. When the conversion is required, samples of monotonic and system times are taken at 'the same' time and the difference is used for conversion from the native time base to the other one. Of course it is not possible to take the samples exactly at the same time, but some effort is made and the difference jitters somewhere around the microsecond decimal place. That is far better than the average precision of assigning timestamps to all timestampable events on FG4 Multibox.

It is possible for user to convert between time bases by himself, because the 'concurrent' samples of monotonic and system times are presented in status. They are taken each second.

ts_monotonic - timestamp based on monotonic timer, in nanoseconds. It starts its counting at some point during FG4 Multibox boot sequence.

ts_system - timestamp based on system timer (aka real-time clock, RTC), in nanoseconds. It starts its counting at 1970-01-01 00:00:00 UTC, without considering leap seconds. It is also known as Unix time.
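The user-side conversion between the time bases can be sketched as follows. The function names are hypothetical, the sample pair is taken from the time status snippet, and the event timestamp is made up for illustration.

```python
from datetime import datetime, timezone


def monotonic_to_system(ts_event_monotonic, sample):
    """Convert a monotonic timestamp (ns) to system time (ns) using one
    'concurrent' sample pair from the time status."""
    offset = sample["ts_system"] - sample["ts_monotonic"]
    return ts_event_monotonic + offset


def system_ns_to_iso(ts_system_ns):
    """Format Unix time in nanoseconds as a UTC ISO 8601 string."""
    seconds, nanos = divmod(ts_system_ns, 1_000_000_000)
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return f"{moment:%Y-%m-%dT%H:%M:%S}.{nanos:09d}Z"


# Sample pair from the time status snippet (element timestamp).
sample = {"ts_monotonic": 477042312369, "ts_system": 1727334221461629400}

# Hypothetical event, timestamped 1.5 ms after the sample was taken.
event_monotonic = sample["ts_monotonic"] + 1_500_000
event_system = monotonic_to_system(event_monotonic, sample)
print(event_system, system_ns_to_iso(event_system))
```

Because the system time may be slewed or stepped, it is probably best to use the sample pair taken closest to the event being converted.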

There exist other types of timestamps, but their source timers are unique to specific places and situations and cannot be shared across the whole system. An example is the stream type, whose source timer exists only when its corresponding pipeline (trigger, video, CAN, ...) exists, and which usually starts counting when the pipeline begins to stream the data (triggers, video frames, CAN messages). Each pipeline can have its own stream timer.

List of all existing JSON pointers (across the whole configuration), at which the timestamp type can be set:

/trigger/timestamp/type (monotonic, system)

/video/captures/<name>/timestamp/osd/binary/type (monotonic, system)

/video/captures/<name>/timestamp/osd/text/type (monotonic, system)

/video/captures/<name>/sinks/image/encoder/png/timestamp/type (monotonic, system)

/video/captures/<name>/sinks/video/muxer/mpegts/timestamp/type (monotonic, stream)

/can/captures/<name>/encoder/canutils/timestamp/type (monotonic, system)

Set current time

curl -v -X POST -H 'Content-Type: application/json' -d '"2023-12-07T15:08:32+01:00"' 'http://192.168.1.200/api/app/actions/time/system_time/set'

Get current time (by calling action)

curl -v -X POST 'http://192.168.1.200/api/app/actions/time/system_time/get'

Get current time (by getting status)

curl -v -X GET 'http://192.168.1.200/api/app/status/time/system_time'

Network configuration and status are located at JSON pointer /network. Configuration and status of particular network devices are located at JSON pointer /network/devices. FG4 Multibox contains one embedded network device, usually named as eth0. The name of embedded network device may change if another network device is connected. This usually happens when the device is connected via PCI. On the other hand, this usually doesn't happen when the device is connected via USB. Network devices are System-managed, so their names are fully controlled by operating system.

Snippet of network configuration and status (at JSON pointer /network)

{

"devices": {

"eth0": {...},

"eth1": {...},

"eth2": {...}

}

}

Snippet of network device configuration (at JSON pointer /network/devices/eth0)

{

"addresses": [

{

"address": "192.168.1.226/24",

"scope": "global"

}

],

"dhcp4_server": {

"enabled": false,

"pool_offset": 100,

"pool_size": 32

},

"dhcp_client": {

"enabled": false,

"metric": 1024,

"type": "ipv4"

},

"enabled": true,

"nameservers": [

"192.168.1.1"

],

"routes": [

{

"destination": "0.0.0.0/0",

"gateway": "192.168.1.1",

"metric": 0,

"scope": "global"

}

]

}

Snippet of network device status (at JSON pointer /network/devices/eth0)

{

"addresses": [

{

"address": "192.168.1.226/24",

"dynamic": false,

"scope": "global",

"secondary": false

},

{

"address": "fe80::4ab0:2dff:fe48:d0d7/64",

"dynamic": false,

"scope": "link",

"secondary": false

}

],

"mac_address": "48:b0:2d:48:d0:d7",

"operational_state": "up"

}

Each network device may have assigned multiple IPv4 and IPv6 addresses, routes and name servers, either statically (by user) or dynamically (by DHCP server). Each network device is able to provide DHCPv4 server. When the network device is enabled and has assigned static IP address (minimum useful configuration), it also has to be connected to real functional network to see both the expected up operational state and configured IP address in status.

To enable network device just set enabled to true. Real device state can be observed in status operational_state, which can be one of these values unknown, notpresent, down, lowerlayerdown, testing, dormant and up. Typically only down and up are used most of the time.

To set static IP addresses fill addresses array. Each item contains IPv4 or IPv6 address (in CIDR notation) and its scope (global, link, host). If there are any doubts about address scope, go with global value. All assigned addresses can be observed in status addresses. Except address and scope there are some additional items. Item dynamic is true if address is assigned by DHCP server. Item secondary is true if address is so called secondary (aliased, not primary). Each network device may have assigned only one primary IPv4 and one primary IPv6 address, so additionally assigned addresses to the same network device are considered as secondary ones.

To set static routes fill routes array. Each item contains destination prefix (IP address in CIDR notation, use 0.0.0.0/0 (IPv4) or ::/0 (IPv6) to specify all possible addresses), gateway IP address, metric (unsigned integer, lower value means higher priority) and scope (global, link, host). If there are any doubts about route scope, go with global value. Optionally source prefix (IP address) may be set.

To set static name servers fill nameservers array. Each item represents IP address of name server.

To set dynamic IP addresses (also routes and name servers) from DHCP server, configure the DHCP client at dhcp_client. To have a working DHCP client, set type to the required IP protocol version (ipv4, ipv6, dual), set metric (unsigned integer, lower value means higher priority) for the dynamically created route and finally set enabled to true.

Each network device is able to provide DHCPv4 server, configurable at dhcp4_server. To have a working DHCPv4 server, just set enabled to true. There are also some optional items allowing to configure the pool of IP addresses (to be leased). Item pool_offset represents the offset of the pool from the start of subnet. If omitted or zero, then the pool starts at the first address after the subnet address. Item pool_size represents the number of addresses in the pool. If omitted or zero, then the pool takes up the rest of the subnet.
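To get a feeling for how pool_offset and pool_size map to real addresses, the pool range can be estimated with a small sketch. The function name is hypothetical, and two details are assumptions rather than documented behavior: the offset is counted from the subnet (network) address, and the broadcast address is never part of the pool.

```python
import ipaddress


def dhcp_pool(interface_cidr, pool_offset=0, pool_size=0):
    """Estimate the first and last leased address of a DHCPv4 pool."""
    network = ipaddress.ip_interface(interface_cidr).network
    # Documented: zero offset means the first address after the subnet address.
    offset = pool_offset or 1
    # Assumption: the broadcast (last) address of the subnet is not leased.
    available = network.num_addresses - 1 - offset
    # Documented: zero size means the rest of the subnet.
    size = min(pool_size, available) if pool_size else available
    first = network.network_address + offset
    last = first + size - 1
    return first, last


# Values from the network device configuration snippet.
print(dhcp_pool("192.168.1.226/24", pool_offset=100, pool_size=32))
# Defaults (both items omitted or zero).
print(dhcp_pool("192.168.1.226/24"))
```

Note that the static address of the device itself (192.168.1.226 in the snippet) lies outside the pool in the first case, but inside the estimated range in the second one.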

Get status of network device eth0

curl -v -X GET 'http://192.168.1.200/api/app/status/network/devices/eth0'

Set static IPv4 address to network device eth1

curl -v -X POST -H 'Content-Type: application/json' -d '[{"address":"192.168.2.200/24","scope":"global"}]' 'http://192.168.1.200/api/app/config/network/devices/eth1/addresses'

Enable network device eth1

curl -v -X POST -H 'Content-Type: application/json' -d 'true' 'http://192.168.1.200/api/app/config/network/devices/eth1/enabled'

Storage configuration and status are located at JSON pointer /storage. The API is divided into two main parts. The first one (named as Disks) provides information about all connected storage devices. The second one (named as Mounts) allows to mount (attach) specified storage devices to their mount points (directories) and thus allowing access to them.

Snippet of storage configuration (at JSON pointer /storage)

{

"mounts": {...}

}

Snippet of storage status (at JSON pointer /storage)

{

"disks": {...},

"mounts": {...}

}

Information about all connected storage devices is available in status at JSON pointer /storage/disks. There is no configuration available.

Snippet of disks status (at JSON pointer /storage/disks)

{

"mmcblk2": {

"partitions": {

"mmcblk2p1": {

"fstype": "vfat",

"label": "SDCARD",

"partuuid": "7de4bdea-01",

"size": 15551430656,

"uuid": "30D4-034F"

}

},

"size": 15552479232

},

"sda": {

"model": "Samsung SSD 870 ",

"partitions": {

"sda1": {

"fstype": "ext4",

"label": "SATADISK",

"partuuid": "0f58d681-01",

"size": 500106813440,

"uuid": "a91b29f2-e8d4-4353-bbc9-7777d9f7cdf9"

}

},

"size": 500107862016,

"vendor": "ATA "

},

"sdb": {

"model": "VoyagerGT ",

"partitions": {

"sdb1": {

"fstype": "vfat",

"label": "FLASH",

"partuuid": "d353cb65-01",

"size": 16239296512,

"uuid": "40EB-8311"

}

},

"size": 16240345088,

"vendor": "Corsair "

},

"sdc": {

"fstype": "vfat",

"label": "FLASH",

"model": "Cruzer Fit ",

"size": 8002732032,

"uuid": "E8CB-810D",

"vendor": "SanDisk "

}

}

Storage devices are System-managed, so their names are fully controlled by operating system. Usually SATA and USB device names are in form of sdX, where X is an alphabetical character distinguishing the devices and respecting their connection order (the first device has assigned name sda, the second one has assigned name sdb, etc.). Usually MMC device names are in form of mmcblkX, where X is a number distinguishing the devices and respecting their connection order (the first device has assigned name mmcblk0, the second one has assigned name mmcblk1, etc.). FG4 Multibox already contains some private embedded MMC devices, so the first user connected device is named as mmcblk2 (actually this is also the last one as FG4 Multibox contains only one physical SD card interface).

Each storage device may contain partitions, which are also system-managed devices. Usually partition names of SATA and USB storage device are constructed by appending partition number to storage device name (sda device may contain partitions named as sda1, sda2, etc.). Usually partition names of MMC storage device are constructed by appending character p and partition number to storage device name (mmcblk2 device may contain partitions named as mmcblk2p1, mmcblk2p2, etc.).

The type of file system is given by item fstype. When this item exists, the file system is known and the corresponding storage device or partition is mountable. Note that there may exist storage device, that doesn't contain any partitions (no partition table), but still it may be mountable. In this case the item fstype is contained at root level of storage device, see sdc device in previous snippet.
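The rule about fstype can be turned into a short helper that lists all mountable devices from the disks status. This is a sketch with a hypothetical function name; the input is a trimmed copy of the disks status snippet.

```python
def mountable_devices(disks):
    """Yield (device_name, fstype, size) for every disk or partition
    whose file system is known, i.e. which has the item fstype."""
    for disk_name, disk in disks.items():
        # A disk without partition table may carry fstype at its root level.
        if "fstype" in disk:
            yield disk_name, disk["fstype"], disk["size"]
        for part_name, part in disk.get("partitions", {}).items():
            if "fstype" in part:
                yield part_name, part["fstype"], part["size"]


# Trimmed copy of the disks status snippet.
disks = {
    "mmcblk2": {
        "partitions": {
            "mmcblk2p1": {"fstype": "vfat", "label": "SDCARD", "size": 15551430656}
        },
        "size": 15552479232,
    },
    "sdc": {"fstype": "vfat", "label": "FLASH", "size": 8002732032},
}

for name, fstype, size in mountable_devices(disks):
    print(name, fstype, size)
```

The device names are used only as opaque keys here; nothing is derived from their form.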

All sizes are in bytes.

Mounts configuration and status are located at JSON pointer /storage/mounts.

Snippet of mounts configuration (at JSON pointer /storage/mounts)

{

"ramdisk": {

"device": "tmpfs",

"enabled": true,

"options": "size=128M"

},

"satadisk": {

"device": "sda1",

"enabled": true

},

"sdcard": {

"device": "mmcblk2p1",

"enabled": true

},

"usbdisk": {

"device": "sdb1",

"enabled": false

},

"usbdisk_private": {

"device": "uuid=40EB-8311",

"enabled": true

}

}

Snippet of mounts status (at JSON pointer /storage/mounts)

{

"ramdisk": {

"device": "tmpfs",

"size": {

"total": 134217728,

"used": 0

}

},

"satadisk": {

"device": "sda1",

"size": {

"total": 491182030848,

"used": 75509760

}

},

"sdcard": {

"device": "mmcblk2p1",

"size": {

"total": 15536226304,

"used": 476225536

}

},

"usbdisk_private": {

"device": "sdb1",

"size": {

"total": 16223436800,

"used": 16384

}

}

}

To have access to a connected storage device, it must be mounted (attached) to a specified mount point (directory). The content of the storage device can then be accessed via Filesystem service. To mount the storage device, just create/update the appropriate object in configuration at JSON pointer /storage/mounts. The key represents the mount point and the value contains the device to be mounted (item device, required), enabled flag (item enabled, required), file system type (item fstype, optional) and mount options (item options, optional). Mount point name is just a user defined string, but it must not be empty and it must not contain the forward slash character "/" (ASCII 0x2F). The only required items specifying the mounted device are device name and enabled flag. In most cases the file system is detected automatically and mount options are not required at all. Device name is either the partition name (in case of partitioned disk, can be found in status at JSON pointer /storage/disks/<disk_name>/partitions/<partition_name>) or the disk name (in case of not partitioned disk, can be found in status at JSON pointer /storage/disks/<disk_name>). When (and only when) the device is really mounted to its mount point, it is also present in status at JSON pointer /storage/mounts. The key represents the mount point and the value contains the name of the mounted device (item device, required) and size information (item size, optional). Sometimes, when the device has just been mounted, getting information about its size may take a long time, so the item size may appear in status later.

The device can also be mounted by specifying its uuid, label, partuuid or partlabel, which can be found in status at JSON pointers /storage/disks/<disk_name>/partitions/<partition_name>/uuid|label|partuuid|partlabel or /storage/disks/<disk_name>/uuid|label. In this case specify the device name as uuid=<value>, label=<value>, partuuid=<value> or partlabel=<value>. This kind of device specification provides a solution for unpredictable disk and partition names.
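The rules for the mount point name and for the mount object can be summarized in a small sketch that prepares the path and JSON body of a configuration request. The function name is hypothetical; only the item names and the naming rules come from the text above.

```python
import json


def mount_request(mount_point, device, enabled=True, fstype=None, options=None):
    """Return (path, json_body) for configuring one mount point."""
    # Mount point must not be empty and must not contain '/' (ASCII 0x2F).
    if not mount_point or "/" in mount_point:
        raise ValueError("mount point must be non-empty and must not contain '/'")
    entry = {"device": device, "enabled": enabled}
    # Optional items are added only when requested.
    if fstype is not None:
        entry["fstype"] = fstype
    if options is not None:
        entry["options"] = options
    return f"/api/app/config/storage/mounts/{mount_point}", json.dumps(entry)


# Mount by file system UUID, independent of the disk name.
path, body = mount_request("usbdisk_private", "uuid=40EB-8311")
print(path)
print(body)
```

The returned path and body correspond to what the curl examples of this chapter pass as URL and -d option.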

There exists a very special mountable storage device, that stores data in physical RAM, often simply called as ramdisk. It is very fast, but also volatile and very limited in size. The name of this device is tmpfs. The contained file system is also tmpfs. The size of mounted device can be set by mount option size=<value><unit>. The unit can be one of K, M or G, for value in kibi, mebi or gibi bytes. The unit can also be %, for value in percentage of physical RAM. When no unit is specified, then value is considered in bytes. When no size option is specified, then it is defaulted to size=50%. Note that the required size is always rounded up to multiple of entire physical RAM page, which is 4096 bytes. The special tmpfs device can be mounted multiple times at the same time. Don't set the size to large numbers, the system may then start to behave in a very non-standard way (when the Linux system is out of memory, it may start swapping or OOM killer may be triggered or something worse may happen).
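The size rules for tmpfs can be illustrated by a short calculation. This is a sketch under stated assumptions: the function name is hypothetical, only the units named above are handled, and the amount of physical RAM is passed in by the caller (8 GiB is used below just as an example value).

```python
PAGE_SIZE = 4096  # physical RAM page size, in bytes
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3}


def tmpfs_size_bytes(value, physical_ram_bytes):
    """Translate the value of the size= mount option to bytes,
    rounded up to a multiple of the RAM page size."""
    value = value.strip()
    if value.endswith("%"):
        requested = physical_ram_bytes * int(value[:-1]) // 100
    elif value[-1] in UNITS:
        requested = int(value[:-1]) * UNITS[value[-1]]
    else:
        requested = int(value)  # no unit means bytes
    # Ceiling division, then back to bytes.
    return -(-requested // PAGE_SIZE) * PAGE_SIZE


ram = 8 * 1024**3  # example value only
print(tmpfs_size_bytes("128M", ram))
print(tmpfs_size_bytes("5000", ram))
print(tmpfs_size_bytes("50%", ram))
```

The result for size=128M agrees with the total size of the ramdisk mount in the mounts status snippet.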

The item options contains comma-separated mount options. In most cases they are not required at all. Actually the options string is directly given to the Linux 'mount' command, so any supported option may be given here. Be careful when using this parameter. When mounting tmpfs device, its size may be specified by size option (see the above paragraph). When read-only mounting is required, then add ro option. There are some options, that are applied automatically, e.g. for vfat file system option utf8 is applied, for ntfs file system option windows_names is applied.

Supported file systems: vfat (fat16/32), exfat, ntfs, ext2, ext3, ext4, tmpfs.

All sizes are in bytes.

ATTENTION:

Be sure the storage device is unmounted before disconnecting it from FG4 Multibox. Otherwise some data may get lost or even the file system may get corrupted. When the storage device is instructed to be unmounted, but it still remains mounted, then in most cases it is actually still in use. Maybe some files are still open, maybe some data are not yet completely flushed to storage.

Get list of connected storage devices

curl -v -X GET 'http://192.168.1.200/api/app/status/storage/disks'

Get list of mounted storage devices (mount points)

curl -v -X GET 'http://192.168.1.200/api/app/status/storage/mounts'

Create configuration for mounting to mount point mntpt (device to be mounted is set to sda1, but the mounting is still disabled for now)

curl -v -X POST -H 'Content-Type: application/json' -d '{"device":"sda1","enabled":false}' 'http://192.168.1.200/api/app/config/storage/mounts/mntpt'

Enable mounting to mount point mntpt (when the device is connected and contains supported file system, it will be mounted)

curl -v -X PUT -H 'Content-Type: application/json' -d 'true' 'http://192.168.1.200/api/app/config/storage/mounts/mntpt/enabled'

Disable mounting to mount point mntpt (when the device is mounted, it will be unmounted)

curl -v -X PUT -H 'Content-Type: application/json' -d 'false' 'http://192.168.1.200/api/app/config/storage/mounts/mntpt/enabled'

Delete configuration for mounting to mount point mntpt

curl -v -X DELETE 'http://192.168.1.200/api/app/config/storage/mounts/mntpt'

See the Filesystem service examples how to manipulate the files on mounted devices.

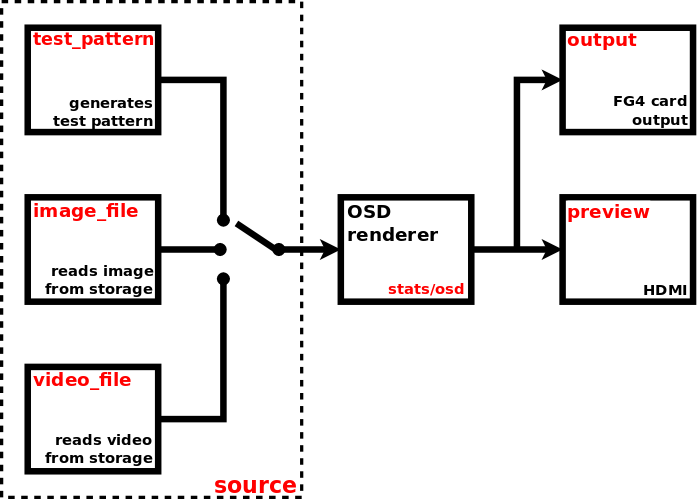

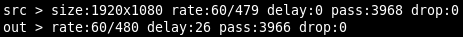

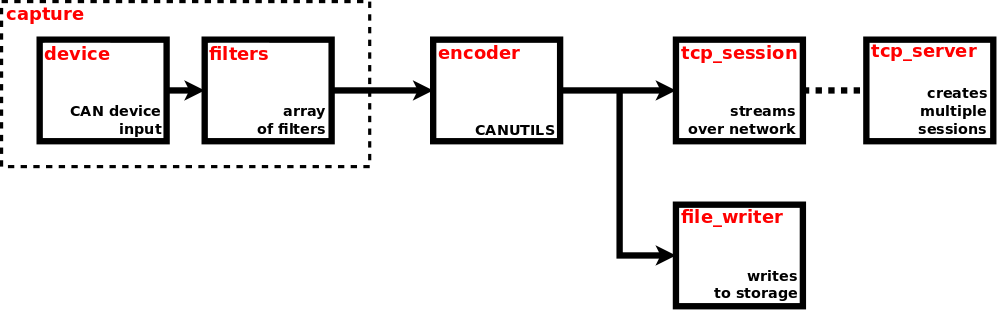

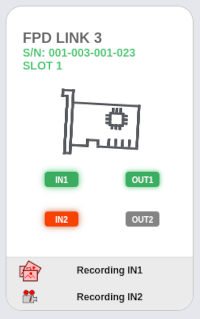

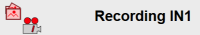

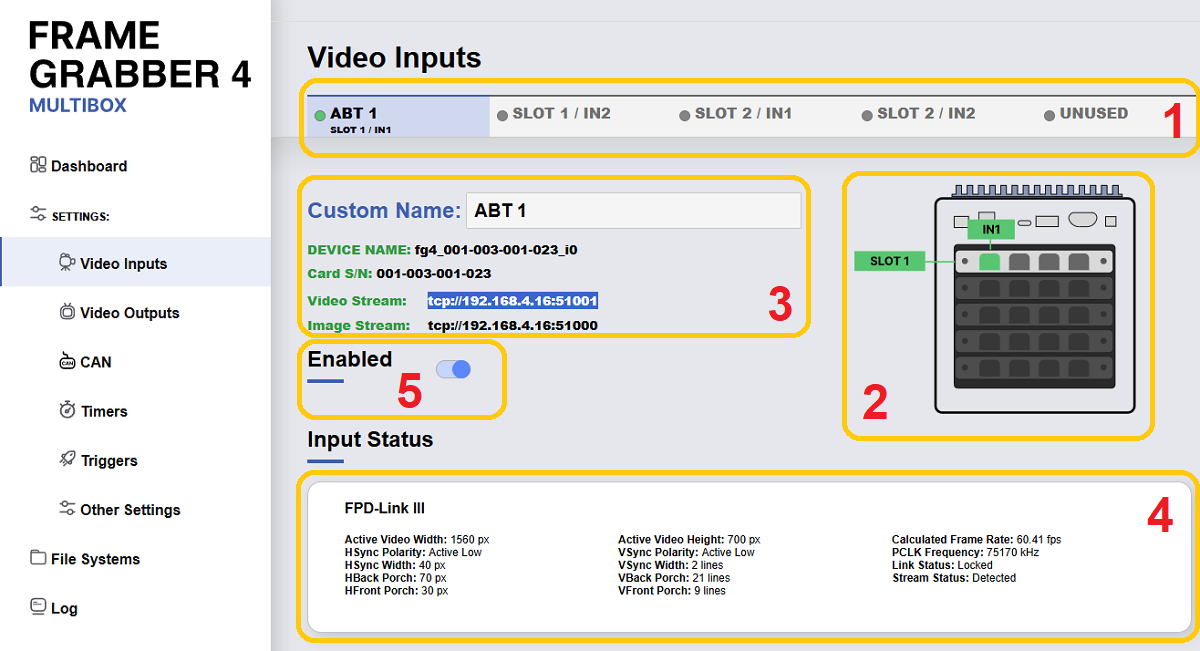

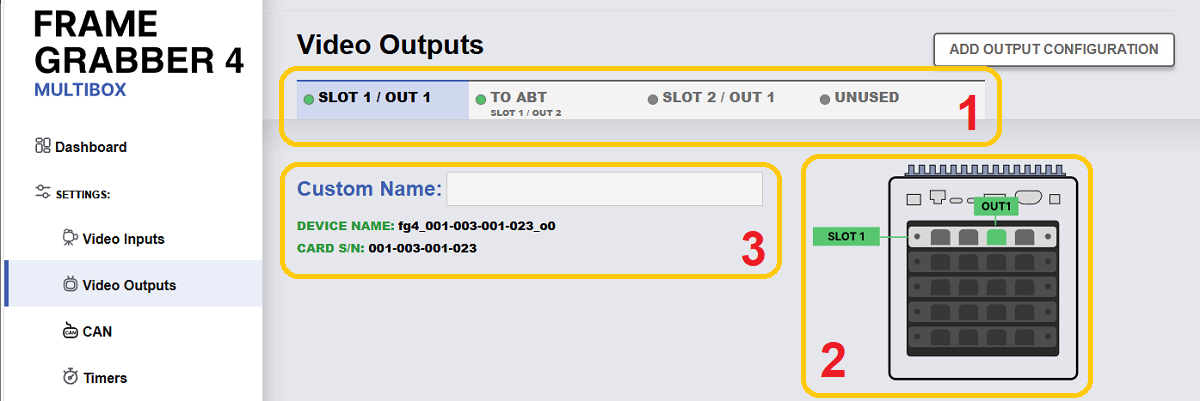

Video configuration and status are located at JSON pointer /video. The API consists of two main parts. The first one (named as video captures) represents the video pipelines, responsible for receiving video frames from physical device (e.g. any video input located on FG4 card's FPDL3/GMSL interface), encoding them and transmitting via network or saving to storage. The second one (named as video outputs) represents the video pipelines, responsible for reading video frames from specified source (e.g. video file, image file or test pattern generator) and transmitting them to physical device (e.g. any video output located on FG4 card's FPDL3 interface). Each capture and output pipeline is identified by its unique name (/video/captures/<name>, /video/outputs/<name>). Actually this name is also the name of physical device, the video frames are received from or transmitted to. It simply means, that each capture and output pipeline has its own associated physical device. Both the capture and output pipelines are System-managed devices, so their names (and consequently the names of associated physical devices) are fully controlled by operating system.

Snippet of video configuration and status (at JSON pointer /video)

{

"captures": {

"fg4_001-003-001-018_i0": {...},

"fg4_001-003-001-018_i1": {...}

},

"outputs": {

"fg4_001-003-001-018_i0": {...},

"fg4_001-003-001-018_i1": {...}

}

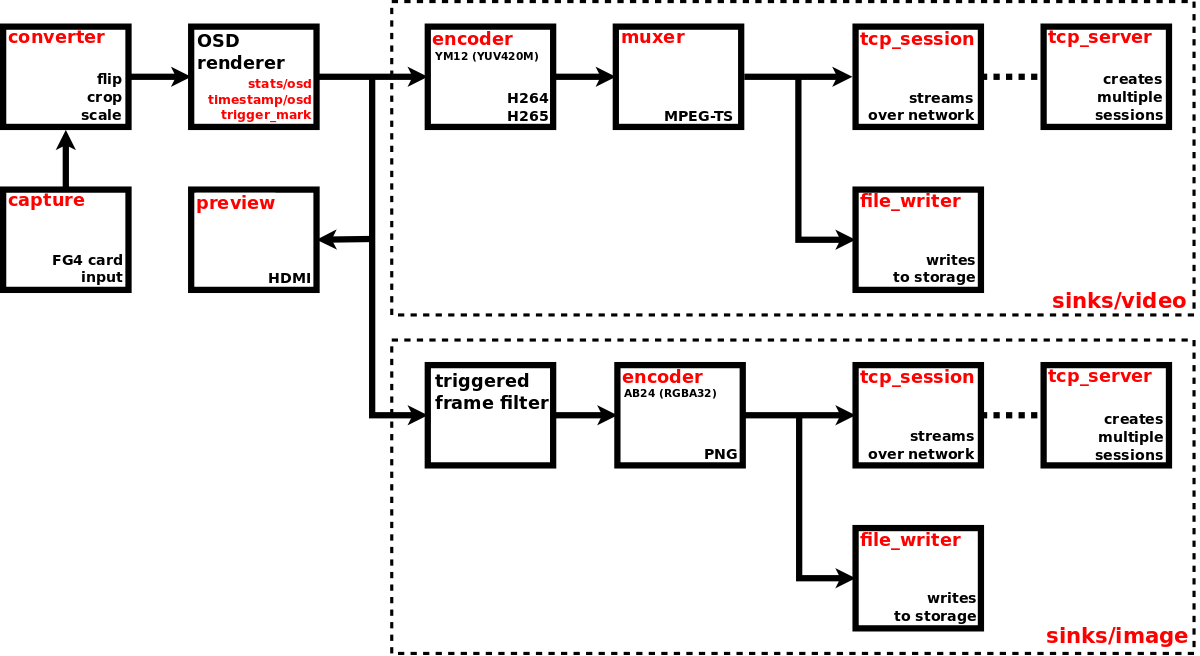

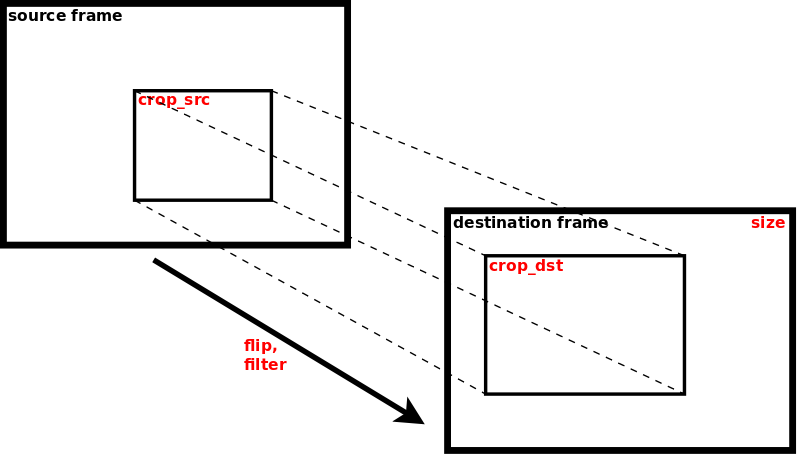

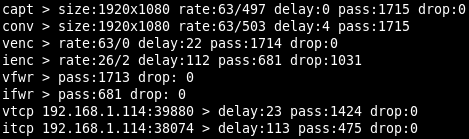

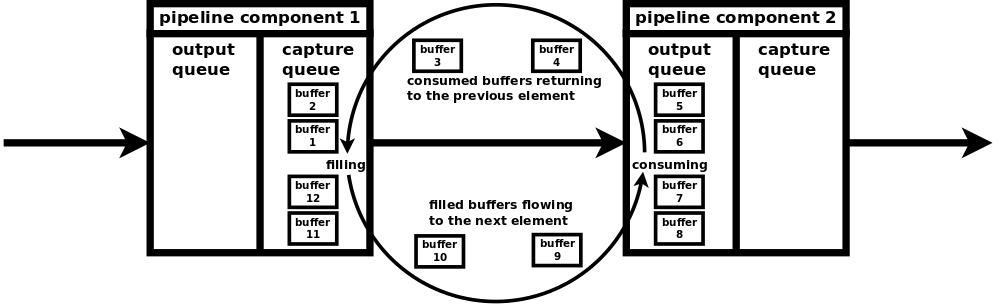

}Next picture shows the component diagram of complete video capture pipeline, with arrows indicating the flow of video frames. Red labels are the names of corresponding objects in configuration and status JSONs, their simplified snippets are shown below the diagram.

Snippet of video capture pipeline configuration (at JSON pointer /video/captures/fg4_001-003-001-018_i0)

{

"enabled": true,

"capture": {

"fg4": {...}

},

"nosignal": {

"enabled": false,

...

},

"converter": {...},

"preview": {

"enabled": false,

...

},

"sinks": {

"image": {

"enabled": false,

"triggered_mode": false,

"encoder": {...},

"file_writer": {...},

"tcp_server": {...},

"tcp_session": {...}

},

"video": {

"enabled": true,

"encoder": {...},

"muxer": {...},

"file_writer": {...},

"tcp_server": {...},

"tcp_session": {...}

}

},

"stats": {...},

"timestamp": {...},

"trigger_mark": {...}

}

Snippet of video capture pipeline status (at JSON pointer /video/captures/fg4_001-003-001-018_i0)

{

"running": true,

"capture": {

"fg4": {

"running": true,

...

}

},

"nosignal": {

"running": false

},

"preview": {

"running": false

},

"sinks": {

"image": {

"running": false,

"file_writer": {...}

},

"video": {

"running": true,

"file_writer": {...}

}

},

"stats": {...}

}

To enable the video capture pipeline, just set enabled to true. See the running property in status to get the real state of the video capture pipeline. If the running property is true, then at least the capture device or the no-signal generator is running. Most individual pipeline components have their own enabled (configuration) and running (status) properties. For the video capture pipeline to run and be fed from the capture device, some conditions must be met. Primarily the video capture pipeline must be enabled, the associated physical device must exist and must be ready to provide the video stream (i.e. in case of grabber device, there must be a valid video signal on its input interface).

The following links help to navigate to particular pipeline components (and other stuff).

Currently only one type of physical capture device is supported, namely FG4. So this chapter covers only working with FG4. The configuration and status are located at JSON pointer /video/captures/<name>/capture/fg4.

Snippet of video capture device configuration (at JSON pointer /video/captures/fg4_001-003-001-018_i0/capture/fg4)

{

"format": {

},

"trigger_sources": {

"signal" : false

},

"interface": {

"fpdl3": {

"color_mapping": "spwg_vesa",

"fpdl3_input_width": "automatic",

"frequency_range": "pll_greater_or_equal_50mhz",

"hsync_gap_length": 1000,

"oldi_lane_width": "dual",

"vsync_gap_length": 2

},

"gmsl": {

"color_mapping": "spwg_vesa",

"frequency_range": "pll_greater_or_equal_50mhz",

"gmsl_fec": "enabled",

"gmsl_mode": "br_12000M",

"gmsl_stream_id": 1,

"hsync_gap_length": 1000,

"oldi_lane_width": "dual",

"vsync_gap_length": 2

}

}

}

Snippet of video capture device status (at JSON pointer /video/captures/fg4_001-003-001-018_i0/capture/fg4)

{

"running": true,

"parent_device": "001-003-001-018",

"format": {

"fourcc": "AR24",

"rate": [150336000, 2391660],

"size": [1920, 1080]

},

"format_descriptions": [

{

"fourcc": "AR24",

"rates": [

[

150336000,

2391660,

125000000,

4294967295,

125000000,

1

]

],

"size": [1920, 1080]

},

{

"fourcc": "YUYV",

"rates": [

[

150336000,

2391660,

125000000,

4294967295,

125000000,

1

]

],

"size": [1920, 1080]

}

],

"interface": {

"fpdl3": {

"color_mapping": "spwg_vesa",

"fpdl3_input_width": "automatic",

"frequency_range": "pll_greater_or_equal_50mhz",

"hback_porch": 50,

"hfront_porch": 50,

"hsync_gap_length": 1000,

"hsync_status": "active_low",

"hsync_width": 40,

"input_id": 0,

"link_status": "locked",

"oldi_lane_width": "dual",

"pclk_frequency": 150338,

"stream_status": "detected",

"vback_porch": 31,

"vfront_porch": 30,

"video_height": 1080,

"video_width": 1920,

"vsync_gap_length": 2,

"vsync_status": "active_low",

"vsync_width": 20

}

}

}

This type of video capture is a part of FG4 card (actually it represents an input of the interface module), so there exists a reference to this card, property parent_device. It contains the name of parent FG4 card, whose properties can be found at JSON pointer /fg4/devices/<name>. See FG4 PCIe cards chapter to get detailed information.

In general a capture device acts as main source of video frames for the whole video capture pipeline. Only when the element format is present in status, the capture device is ready to provide the video stream (i.e. in case of FG4 card there is a valid video signal on its input interface). The format describes the stream, that is provided by the capture device. When the capture device is running, then format describes the actually flowing stream. When the capture device is not running, then format describes the stream, that will be flowing when the pipeline is enabled (and consequently running) and no custom format is specified. The format has these properties:

fourcc - Pixel format represented as FourCC.

size - Frame size [width, height], in pixels.

rate - Frame rate represented as rational number [num, den], in frames per second.

It is allowed to specify a custom format. If the element format is present in configuration, the capture device tries to provide the stream in specified format. If the specified format cannot be used, then some other is used. Again, when pipeline is running, check format in status to get the actually used format. In configuration the element format is optional and also its all three sub-elements fourcc, size and rate are optional. Currently only fourcc and rate are evaluated, size is ignored. Possible format values can be determined from status element format_descriptions. It is actually an array of allowed formats, where each element has these properties:

fourcc - Pixel format represented as FourCC.

size - Frame size, in pixels. It is represented as [width, height] in case of discrete size, or as [min_width, min_height, max_width, max_height, step_width, step_height] in case of step-wise size.

rates - Array of frame rates, in frames per second. Each one is represented as [num, den] in case of discrete rate, or as [max_num, max_den, min_num, min_den, step_num, step_den] in case of step-wise rate. In calculations step must be used as period (not frame rate), with flipped numerator and denominator, e.g. custom_rate = 1/(min_den/min_num + 10 * step_den/step_num).
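The step-wise rate arithmetic described above can be sketched in Python. This is a minimal sketch, assuming a step-wise rates entry laid out exactly as listed above; the function name custom_rate and the sample values in the usage line are hypothetical and not part of the FG4 Multibox API.

```python
from fractions import Fraction

def custom_rate(stepwise, steps):
    """Apply the formula from the text to a step-wise rates entry.

    `stepwise` is [max_num, max_den, min_num, min_den, step_num, step_den].
    `steps` is the number of period steps to add (10 in the text's example).
    """
    max_num, max_den, min_num, min_den, step_num, step_den = stepwise
    # The step is a period, not a rate: flip numerator and denominator.
    period = Fraction(min_den, min_num) + steps * Fraction(step_den, step_num)
    return 1 / period

# Hypothetical entry: step of 1/1000 s, ten steps added.
rate = custom_rate([60, 1, 1, 1, 1000, 1], 10)
print([rate.numerator, rate.denominator])
```

Using Fraction keeps the result exact, so it can be written back as a [num, den] pair without rounding.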

Element fourcc may contain only AR24 or YUYV. Pixel format AR24 is ABGR with four bytes per pixel, pixel format YUYV is YUV 4:2:2 with four bytes per two pixels. So YUYV takes only half the bandwidth compared to AR24. On the other hand, it may lose some information, because e.g. FPDL3/GMSL interfaces use RGB with three bytes per pixel. Omit the fourcc to use the default AR24.
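The bandwidth difference between the two pixel formats can be illustrated with a short calculation. This is a sketch only: the function name stream_bandwidth and the 1920x1080 at 60 fps example are assumptions chosen for illustration, and the result covers raw pixel data without any transport overhead.

```python
# Bytes per pixel as stated in the text (YUYV: four bytes per two pixels).
BYTES_PER_PIXEL = {"AR24": 4.0, "YUYV": 2.0}

def stream_bandwidth(fourcc, width, height, rate_num, rate_den):
    """Return the raw pixel data bandwidth in bytes per second."""
    return BYTES_PER_PIXEL[fourcc] * width * height * rate_num / rate_den

ar24 = stream_bandwidth("AR24", 1920, 1080, 60, 1)
yuyv = stream_bandwidth("YUYV", 1920, 1080, 60, 1)
print(ar24 / 1e6, yuyv / 1e6)  # megabytes per second
```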

Element rate may be used to set a custom fixed frame rate, which must always be less than the one being captured on the hardware interface. It may be useful for reducing the bandwidth. Omit the rate to use the value captured on the interface.

Element size is ignored, because the value captured on the interface is always used.
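As an illustration of the rules for fourcc, rate and size, the following sketch builds the body of a custom format element. The helper name make_format_element is hypothetical, and where the resulting element has to be placed within the capture configuration is not covered by this sketch.

```python
import json

def make_format_element(fourcc=None, rate=None):
    """Build the body of a custom `format` element.

    Omitted members are left out so that the defaults apply.
    """
    if fourcc is not None and fourcc not in ("AR24", "YUYV"):
        raise ValueError("fourcc must be AR24 or YUYV")
    element = {}
    if fourcc is not None:
        element["fourcc"] = fourcc
    if rate is not None:
        num, den = rate
        element["rate"] = [num, den]
    # size is deliberately not emitted: the text says it is ignored.
    return element

print(json.dumps(make_format_element("YUYV", (30, 1))))
# {"fourcc": "YUYV", "rate": [30, 1]}
```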

Although custom pixel format may be specified, after capturing into the pipeline the pixel format is always transformed to the format required by the following pipeline components, e.g. video stream (H264, H265) usually uses YM12 (YUV 4:2:0 planar), image stream (PNG) uses AB24 (RGBA with four bytes per pixel).

Each FG4 capture device can act as a trigger source within the Trigger system. The individual sources can be configured in the trigger_sources element. Currently there is only one boolean configuration property, signal, which enables or disables all trigger sources related to the input signal. These sources are /video/captures/<name>/capture/fg4/signal/change/on and /video/captures/<name>/capture/fg4/signal/change/off, which generate their triggers whenever the video signal changes on the input of the FG4 capture device. Value on means that a valid video signal was detected immediately after the change. Value off means that no valid video signal was detected immediately after the change.
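The two trigger source paths differ only in the capture name and the final segment, so they can be derived with a small helper. This is a sketch; the function name signal_trigger_sources is hypothetical, and the capture name in the usage line is taken from the snippets in this chapter.

```python
def signal_trigger_sources(capture_name):
    """Return the signal-change trigger source paths of one FG4 capture device."""
    base = f"/video/captures/{capture_name}/capture/fg4/signal/change"
    return {"on": f"{base}/on", "off": f"{base}/off"}

sources = signal_trigger_sources("fg4_001-003-001-018_i0")
print(sources["on"])
print(sources["off"])
```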

Each FG4 card may contain an interchangeable part called the interface module (or just interface). Each interface may contain one or more video inputs. Each video input is represented by this capture device. Check the status element interface to get the interface type actually in use (it contains exactly one sub-element, fpdl3 or gmsl). Each interface has a number of properties, whose actual values are available in status. Some of them can be set to a custom value by putting the property into configuration. To use the default (or last set) value, omit the property from configuration. Currently FPDL3 and GMSL interfaces are supported.
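Because the status element interface contains exactly one sub-element, the interface type can be read from its single key. The sketch below assumes the interface element has already been parsed from JSON into a Python dict; the function name interface_type and the sample values are hypothetical.

```python
def interface_type(interface_status):
    """Return 'fpdl3' or 'gmsl' from a parsed status `interface` element."""
    keys = list(interface_status)
    if len(keys) != 1 or keys[0] not in ("fpdl3", "gmsl"):
        raise ValueError(f"unexpected interface element: {keys}")
    return keys[0]

# Hypothetical, shortened status element.
status = {"gmsl": {"input_id": 0, "link_status": "locked"}}
kind = interface_type(status)
print(kind, status[kind]["link_status"])
```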

List of all existing FPDL3 interface properties (available in status):

input_id - integer. Input number ID, zero based.

oldi_lane_width - string enum (single, dual). Number of deserializer output lanes.

color_mapping - string enum (oldi_jeida, spwg_vesa). Mapping of the incoming bits in the signal to the colour bits of the pixels.

link_status - string enum (unlocked, locked). Video link status. If the link is locked, chips are properly connected and communicating at the same speed and protocol. The link can be locked without an active video stream.

stream_status - string enum (not_detected, detected). Video stream status. A stream is detected if the link is locked, the input pixel clock is running and the DE signal is moving.

video_width - integer. Video stream width. This is the actual width as detected by the HW.

video_height - integer. Video stream height. This is the actual height as detected by the HW.

vsync_status - string enum (active_low, active_high, not_available). The type of VSYNC pulses as detected by the video format detector.

hsync_status - string enum (active_low, active_high, not_available). The type of HSYNC pulses as detected by the video format detector.

vsync_gap_length - integer. If the incoming video signal does not contain synchronization VSYNC and HSYNC pulses, these must be generated internally in the FPGA to achieve the correct frame ordering. This value indicates how many empty pixels (pixels with deasserted Data Enable signal) are necessary to generate the internal VSYNC pulse.

hsync_gap_length - integer. If the incoming video signal does not contain synchronization VSYNC and HSYNC pulses, these must be generated internally in the FPGA to achieve the correct frame ordering. This value indicates how many empty pixels (pixels with deasserted Data Enable signal) are necessary to generate the internal HSYNC pulse. The value must be greater than 1 and smaller than vsync_gap_length.

pclk_frequency - integer. Input pixel clock frequency in kHz.

hsync_width - integer. Width of the HSYNC signal in PCLK pulses.

vsync_width - integer. Width of the VSYNC signal in video lines.

hback_porch - integer. Number of PCLK pulses between deassertion of the HSYNC signal and the first valid pixel in the video line (marked by DE=1).

hfront_porch - integer. Number of PCLK pulses between the end of the last valid pixel in the video line (marked by DE=1) and assertion of the HSYNC signal.

vback_porch - integer. Number of video lines between deassertion of the VSYNC signal and the video line with the first valid pixel (marked by DE=1).

vfront_porch - integer. Number of video lines between the end of the last valid pixel line (marked by DE=1) and assertion of the VSYNC signal.

frequency_range - string enum (pll_less_than_50mhz, pll_greater_or_equal_50mhz). PLL frequency range of the OLDI input clock generator. The PLL frequency is derived from the Pixel Clock Frequency (PCLK) and is equal to PCLK if oldi_lane_width is set to single and PCLK/2 if oldi_lane_width is set to dual.

fpdl3_input_width - string enum (automatic, single, dual). Number of deserializer input lines.
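The relation between pclk_frequency, oldi_lane_width and frequency_range can be sketched as follows. Note that pclk_frequency is given in kHz, so the 50 MHz threshold equals 50000 kHz. The function name frequency_range_for is hypothetical, and whether the value should be chosen this way on a given setup is an assumption derived only from the property description above.

```python
def frequency_range_for(pclk_khz, oldi_lane_width):
    """Pick the frequency_range enum value matching the PLL frequency."""
    if oldi_lane_width not in ("single", "dual"):
        raise ValueError("oldi_lane_width must be single or dual")
    # PLL frequency equals PCLK for single lane width, PCLK/2 for dual.
    pll_khz = pclk_khz if oldi_lane_width == "single" else pclk_khz / 2
    if pll_khz < 50_000:
        return "pll_less_than_50mhz"
    return "pll_greater_or_equal_50mhz"

print(frequency_range_for(80_000, "dual"))
```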

List of configurable FPDL3 interface properties (may occur in configuration):

oldi_lane_width

color_mapping

vsync_gap_length

hsync_gap_length

frequency_range

fpdl3_input_width
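A configuration can be checked against the list above before it is sent to the device. The following is a minimal sketch, assuming the configuration and status of the interface are available as Python dicts; the function name check_fpdl3_config and the sample values are hypothetical, and the device may apply further checks that are not modelled here.

```python
FPDL3_CONFIGURABLE = {
    "oldi_lane_width", "color_mapping", "vsync_gap_length",
    "hsync_gap_length", "frequency_range", "fpdl3_input_width",
}

def check_fpdl3_config(config, status):
    """Raise ValueError if `config` breaks the rules stated in the text."""
    unknown = set(config) - FPDL3_CONFIGURABLE
    if unknown:
        raise ValueError(f"not configurable: {sorted(unknown)}")
    if "hsync_gap_length" in config or "vsync_gap_length" in config:
        # Omitted properties keep their current value, so fall back to status.
        hgap = config.get("hsync_gap_length", status.get("hsync_gap_length"))
        vgap = config.get("vsync_gap_length", status.get("vsync_gap_length"))
        if hgap is not None and vgap is not None and not (1 < hgap < vgap):
            raise ValueError("hsync_gap_length must be > 1 and < vsync_gap_length")
    return config

# Hypothetical current values.
status = {"hsync_gap_length": 16, "vsync_gap_length": 32}
print(check_fpdl3_config({"hsync_gap_length": 8}, status))
```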

The frame rate may be computed by the formula:

frame_rate = pclk_frequency * 1000/(total_width * total_height)

total_width = video_width + hfront_porch + hback_porch + hsync_width

total_height = video_height + vfront_porch + vback_porch + vsync_width
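The formula translates directly into code. The sketch below assumes the interface status is available as a Python dict; the function name frame_rate is hypothetical and the sample values are the common 1080p60 timing, used only for illustration and not read from a device.

```python
def frame_rate(s):
    """Compute the frame rate in frames per second from interface status values."""
    total_width = (s["video_width"] + s["hfront_porch"]
                   + s["hback_porch"] + s["hsync_width"])
    total_height = (s["video_height"] + s["vfront_porch"]
                    + s["vback_porch"] + s["vsync_width"])
    # pclk_frequency is in kHz, hence the factor 1000.
    return s["pclk_frequency"] * 1000 / (total_width * total_height)

# Illustrative values only.
sample = {
    "pclk_frequency": 148500,
    "video_width": 1920, "hfront_porch": 88, "hback_porch": 148, "hsync_width": 44,
    "video_height": 1080, "vfront_porch": 4, "vback_porch": 36, "vsync_width": 5,
}
print(frame_rate(sample))  # 60.0
```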

List of all existing GMSL interface properties (available in status):

input_id - integer. Input number ID, zero based.

oldi_lane_width - string enum (single, dual). Number of deserializer output lanes.

color_mapping - string enum (oldi_jeida, spwg_vesa). Mapping of the incoming bits in the signal to the colour bits of the pixels.

link_status - string enum (unlocked, locked). Video link status. If the link is locked, chips are properly connected and communicating at the same speed and protocol. The link can be locked without an active video stream.

stream_status - string enum (not_detected, detected). Video stream status. A stream is detected if the link is locked, the input pixel clock is running and the DE signal is moving.

video_width - integer. Video stream width. This is the actual width as detected by the HW.

video_height - integer. Video stream height. This is the actual height as detected by the HW.

vsync_status - string enum (active_low, active_high, not_available). The type of VSYNC pulses as detected by the video format detector.

hsync_status - string enum (active_low, active_high, not_available). The type of HSYNC pulses as detected by the video format detector.

vsync_gap_length - integer. If the incoming video signal does not contain synchronization VSYNC and HSYNC pulses, these must be generated internally in the FPGA to achieve the correct frame ordering. This value indicates how many empty pixels (pixels with deasserted Data Enable signal) are necessary to generate the internal VSYNC pulse.

hsync_gap_length - integer. If the incoming video signal does not contain synchronization VSYNC and HSYNC pulses, these must be generated internally in the FPGA to achieve the correct frame ordering. This value indicates how many empty pixels (pixels with deasserted Data Enable signal) are necessary to generate the internal HSYNC pulse. The value must be greater than 1 and smaller than vsync_gap_length.

pclk_frequency - integer. Input pixel clock frequency in kHz.

hsync_width - integer. Width of the HSYNC signal in PCLK pulses.

vsync_width - integer. Width of the VSYNC signal in video lines.

hback_porch - integer. Number of PCLK pulses between deassertion of the HSYNC signal and the first valid pixel in the video line (marked by DE=1).

hfront_porch - integer. Number of PCLK pulses between the end of the last valid pixel in the video line (marked by DE=1) and assertion of the HSYNC signal.

vback_porch - integer. Number of video lines between deassertion of the VSYNC signal and the video line with the first valid pixel (marked by DE=1).

vfront_porch - integer. Number of video lines between the end of the last valid pixel line (marked by DE=1) and assertion of the VSYNC signal.

frequency_range - string enum (pll_less_than_50mhz, pll_greater_or_equal_50mhz). PLL frequency range of the OLDI input clock generator. The PLL frequency is derived from the Pixel Clock Frequency (PCLK) and is equal to PCLK if oldi_lane_width is set to single and PCLK/2 if oldi_lane_width is set to dual.

gmsl_mode - string enum (br_12000M, br_6000M, br_3000M, br_1500M). GMSL speed mode.

gmsl_stream_id - integer. The GMSL multi-stream contains up to four video streams. This parameter selects which stream is captured by the video input. The value is the zero-based index of the stream.

gmsl_fec - string enum (disabled, enabled). GMSL Forward Error Correction (FEC).

List of configurable GMSL interface properties (may occur in configuration):

oldi_lane_width

color_mapping

vsync_gap_length

hsync_gap_length

frequency_range

gmsl_mode

gmsl_stream_id

gmsl_fec
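The GMSL-specific properties have small, closed value sets, which makes them easy to validate before use. This is a sketch under the assumption that the configuration is available as a Python dict; the function name check_gmsl_config is hypothetical, and the stream index range 0 to 3 is derived from the statement that the multi-stream contains up to four streams.

```python
GMSL_ENUMS = {
    "gmsl_mode": ("br_12000M", "br_6000M", "br_3000M", "br_1500M"),
    "gmsl_fec": ("disabled", "enabled"),
}

def check_gmsl_config(config):
    """Raise ValueError if a GMSL-specific property has an unsupported value."""
    for key, allowed in GMSL_ENUMS.items():
        if key in config and config[key] not in allowed:
            raise ValueError(f"{key} must be one of {allowed}")
    stream_id = config.get("gmsl_stream_id")
    # Up to four streams, zero-based index.
    if stream_id is not None and stream_id not in range(4):
        raise ValueError("gmsl_stream_id must be in the range 0..3")
    return config

print(check_gmsl_config({"gmsl_mode": "br_6000M", "gmsl_stream_id": 1}))
```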

The frame rate may be computed by the formula:

frame_rate = pclk_frequency * 1000/(total_width * total_height)

total_width = video_width + hfront_porch + hback_porch + hsync_width

total_height = video_height + vfront_porch + vback_porch + vsync_width

The no-signal generator is a component that generates an auxiliary video signal. Its configuration and status are located at JSON pointer /video/captures/<name>/nosignal.

Snippet of no-signal generator configuration (at JSON pointer /video/captures/fg4_001-003-001-018_i0/nosignal)

{

"enabled" : false,

"pattern" : "solid_magenta_100",

"format" : {

"size" : [640, 480],

"rate" : [60, 1]

},

"follow_capture_format": false

}

Snippet of no-signal generator status (at JSON pointer /video/captures/fg4_001-003-001-018_i0/nosignal)

{

"running" : false

}

When no signal is present on the input of the capture device, this generator can be used as an alternate source of video signal. When the signals from the capture device and the generator have the same resolution, the video sources can be swapped without recreating the pipeline, so the running stream is not broken. There is always a small gap between the last frame from the signal generator and the first frame from the capture device (and vice versa), typically 8 frames (required by the FG4 PCIe card) plus an additional 50-100 ms (required by the Jetson).
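The switching conditions can be expressed as two small helpers. This is a rough sketch only: the function names are hypothetical, and it assumes the 8 frames are counted at the frame rate of the running stream, which the text does not state explicitly.

```python
def can_swap_without_rebuild(capture_size, nosignal_size):
    """True if both sources have the same [width, height] resolution."""
    return list(capture_size) == list(nosignal_size)

def estimated_gap_ms(rate_num, rate_den, card_frames=8, jetson_ms=(50, 100)):
    """Return the (min, max) typical switching gap in milliseconds."""
    frame_ms = 1000 * rate_den / rate_num
    card_ms = card_frames * frame_ms
    return (card_ms + jetson_ms[0], card_ms + jetson_ms[1])

print(can_swap_without_rebuild([1920, 1080], [640, 480]))  # False
print(estimated_gap_ms(40, 1))  # (250.0, 300.0)
```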

enabled - set to true to enable no-signal generator. See running status property to get the real state.

pattern - video signal pattern. It must contain one of these values:

- solid_black

- solid_white_100

- solid_red_100

- solid_green_100

- solid_blue_100

- solid_yellow_100

- solid_cyan_100

- solid_magenta_100

- text_nosignal_white_100

- text_nosignal_white_100_on_blue_100

The generated patterns (frames) are natively represented in RGB, with each color component in the range from 0x00 (0) to 0xFF (255). The suffix 100 means that the color components in use have the value 0xFF (100 %).
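For reference, the solid patterns can be mapped to RGB triples as sketched below. The table is an assumption based on the usual additive mixing of primary colors with components at 0xFF; the manual does not list the exact values, and the text patterns are left out because their rendering is not described.

```python
# Assumed RGB values (R, G, B) of the solid patterns, components 0x00 or 0xFF.
SOLID_PATTERN_RGB = {
    "solid_black": (0x00, 0x00, 0x00),
    "solid_white_100": (0xFF, 0xFF, 0xFF),
    "solid_red_100": (0xFF, 0x00, 0x00),
    "solid_green_100": (0x00, 0xFF, 0x00),
    "solid_blue_100": (0x00, 0x00, 0xFF),
    "solid_yellow_100": (0xFF, 0xFF, 0x00),
    "solid_cyan_100": (0x00, 0xFF, 0xFF),
    "solid_magenta_100": (0xFF, 0x00, 0xFF),
}

def pattern_rgb(pattern):
    """Return the assumed RGB triple of a solid pattern name."""
    return SOLID_PATTERN_RGB[pattern]

print(pattern_rgb("solid_magenta_100"))  # (255, 0, 255)
```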